【25】Comparing the ReLU Family: Training with ReLU, LeakyReLU, PReLU, and SELU

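Today I'm running a head-to-head comparison of the ReLU family. The contenders are:

- Basic ReLU
- Leaky ReLU, whose negative side has a small slope instead of a flat zero
- PReLU, which adds a parameter that controls that negative slope
- SELU, the trendier newcomer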

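Honestly, even though there are so many ReLU variants, in practice I have only ever used the first, basic one, so I'm quite curious how big the differences will be in this experiment.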

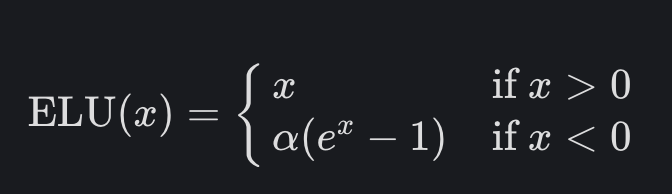

Because I want to train for many epochs this time, I switched the dataset to beans.

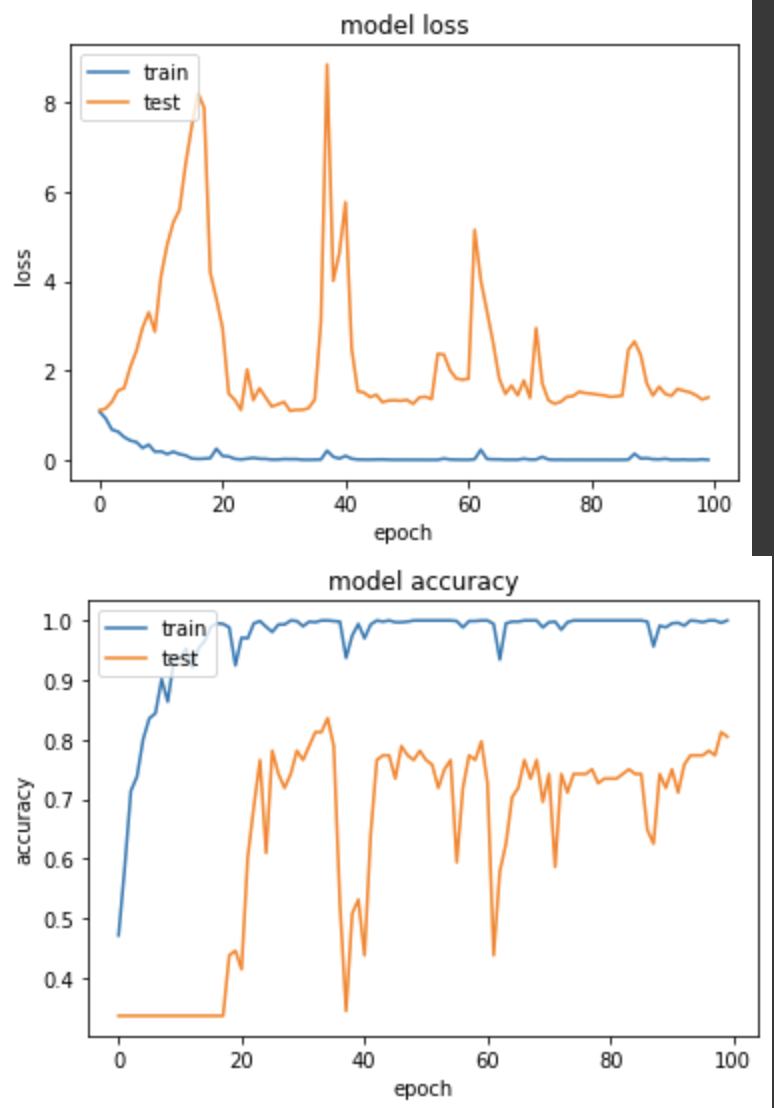

Experiment 1: Basic ReLU

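```python
import tensorflow as tf


def bottleneck(net, filters, out_ch, strides, shortcut=True, zero_pad=False):
    """MobileNetV2 inverted residual block; `filters` is the block's input channel count."""
    # Stride-2 blocks pad manually and then use 'valid' padding
    padding = 'valid' if zero_pad else 'same'
    shortcut_net = net

    # 1x1 expansion: widen the channels by a factor of 6
    net = tf.keras.layers.Conv2D(filters * 6, 1, use_bias=False, padding='same')(net)
    net = tf.keras.layers.BatchNormalization()(net)
    net = tf.keras.layers.ReLU(max_value=6)(net)  # ReLU6

    if zero_pad:
        # Pad one row at the bottom and one column at the right
        net = tf.keras.layers.ZeroPadding2D(padding=((0, 1), (0, 1)))(net)

    # 3x3 depthwise convolution
    net = tf.keras.layers.DepthwiseConv2D(3, strides=strides, use_bias=False, padding=padding)(net)
    net = tf.keras.layers.BatchNormalization()(net)
    net = tf.keras.layers.ReLU(max_value=6)(net)

    # 1x1 linear projection (no activation afterwards)
    net = tf.keras.layers.Conv2D(out_ch, 1, use_bias=False, padding='same')(net)
    net = tf.keras.layers.BatchNormalization()(net)

    if shortcut:
        # Residual connection; only used when input and output shapes match
        net = tf.keras.layers.Add()([net, shortcut_net])
    return net
```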
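For stride-2 blocks, `bottleneck` pads only the bottom and right edges and then runs the depthwise conv with `'valid'` padding. The plain-Python sketch below (the helper names `valid_out` and `same_out` are made up for illustration) uses the usual output-size formula floor((n + p - k) / s) + 1 for a 'valid' convolution over n + p inputs. It confirms that this padding halves an even feature-map size, the same result `'same'` padding would give, and traces how the stem plus the four stride-2 blocks (block_1, block_3, block_6, block_13) shrink a 224x224 input to 7x7.

```python
def valid_out(n, k, s, pad=0):
    """Output length of a 'valid' convolution after adding `pad` zeros in total."""
    return (n + pad - k) // s + 1


def same_out(n, s):
    """Output length of a 'same' convolution: ceil(n / s)."""
    return -(-n // s)


# zero_pad=True path: pad (0, 1) adds one row/column, then 3x3 stride-2 'valid'
for n in (112, 56, 28, 14):
    assert valid_out(n, k=3, s=2, pad=1) == same_out(n, 2) == n // 2

# Stem conv (stride 2, 'same'), then the four stride-2 bottleneck blocks
size = same_out(224, 2)
sizes = [224, size]
for _ in range(4):
    size = valid_out(size, k=3, s=2, pad=1)
    sizes.append(size)
print(sizes)  # [224, 112, 56, 28, 14, 7]
```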

```python
def get_mobilenetV2_relu(shape):
    input_node = tf.keras.layers.Input(shape=shape)

    # Stem: 3x3 stride-2 conv, then a depthwise conv and a 1x1 projection to 16 channels
    net = tf.keras.layers.Conv2D(32, 3, (2, 2), use_bias=False, padding='same')(input_node)
    net = tf.keras.layers.BatchNormalization()(net)
    net = tf.keras.layers.ReLU(max_value=6)(net)
    net = tf.keras.layers.DepthwiseConv2D(3, use_bias=False, padding='same')(net)
    net = tf.keras.layers.BatchNormalization()(net)
    net = tf.keras.layers.ReLU(max_value=6)(net)
    net = tf.keras.layers.Conv2D(16, 1, use_bias=False, padding='same')(net)
    net = tf.keras.layers.BatchNormalization()(net)

    # bottleneck(net, in_ch, out_ch, strides, ...)
    net = bottleneck(net, 16, 24, (2, 2), shortcut=False, zero_pad=True)  # block_1
    net = bottleneck(net, 24, 24, (1, 1), shortcut=True)  # block_2
    net = bottleneck(net, 24, 32, (2, 2), shortcut=False, zero_pad=True)  # block_3
    net = bottleneck(net, 32, 32, (1, 1), shortcut=True)  # block_4
    net = bottleneck(net, 32, 32, (1, 1), shortcut=True)  # block_5
    net = bottleneck(net, 32, 64, (2, 2), shortcut=False, zero_pad=True)  # block_6
    net = bottleneck(net, 64, 64, (1, 1), shortcut=True)  # block_7
    net = bottleneck(net, 64, 64, (1, 1), shortcut=True)  # block_8
    net = bottleneck(net, 64, 64, (1, 1), shortcut=True)  # block_9
    net = bottleneck(net, 64, 96, (1, 1), shortcut=False)  # block_10
    net = bottleneck(net, 96, 96, (1, 1), shortcut=True)  # block_11
    net = bottleneck(net, 96, 96, (1, 1), shortcut=True)  # block_12
    net = bottleneck(net, 96, 160, (2, 2), shortcut=False, zero_pad=True)  # block_13
    net = bottleneck(net, 160, 160, (1, 1), shortcut=True)  # block_14
    net = bottleneck(net, 160, 160, (1, 1), shortcut=True)  # block_15
    net = bottleneck(net, 160, 320, (1, 1), shortcut=False)  # block_16

    # Final 1x1 conv to 1280 channels
    net = tf.keras.layers.Conv2D(1280, 1, use_bias=False, padding='same')(net)
    net = tf.keras.layers.BatchNormalization()(net)
    net = tf.keras.layers.ReLU(max_value=6)(net)
    return input_node, net


# NUM_OF_CLASS, LR, EPOCHS, ds_train and ds_test come from the data/setup code (not shown)
input_node, net = get_mobilenetV2_relu((224, 224, 3))
net = tf.keras.layers.GlobalAveragePooling2D()(net)
net = tf.keras.layers.Dense(NUM_OF_CLASS)(net)  # raw logits, hence from_logits=True
model = tf.keras.Model(inputs=[input_node], outputs=[net])

model.compile(
    optimizer=tf.keras.optimizers.SGD(LR),
    loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True),
    metrics=[tf.keras.metrics.SparseCategoricalAccuracy()],
)

history = model.fit(
    ds_train,
    epochs=EPOCHS,
    validation_data=ds_test,
    verbose=True)
```
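Note that `tf.keras.layers.ReLU(max_value=6)` is ReLU6, the clipped variant used in MobileNetV2, rather than the unbounded basic ReLU (which is what `tf.keras.layers.ReLU()` gives with no arguments). A minimal NumPy sketch of the difference; the function names are illustrative only:

```python
import numpy as np


def relu(x):
    """Plain (unbounded) ReLU: max(x, 0)."""
    return np.maximum(x, 0.0)


def relu6(x):
    """ReLU6: clip x to [0, 6], i.e. what ReLU(max_value=6) computes."""
    return np.minimum(np.maximum(x, 0.0), 6.0)


x = np.array([-3.0, 0.0, 2.5, 6.0, 10.0])
print(relu(x).tolist())   # [0.0, 0.0, 2.5, 6.0, 10.0]
print(relu6(x).tolist())  # [0.0, 0.0, 2.5, 6.0, 6.0]
```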

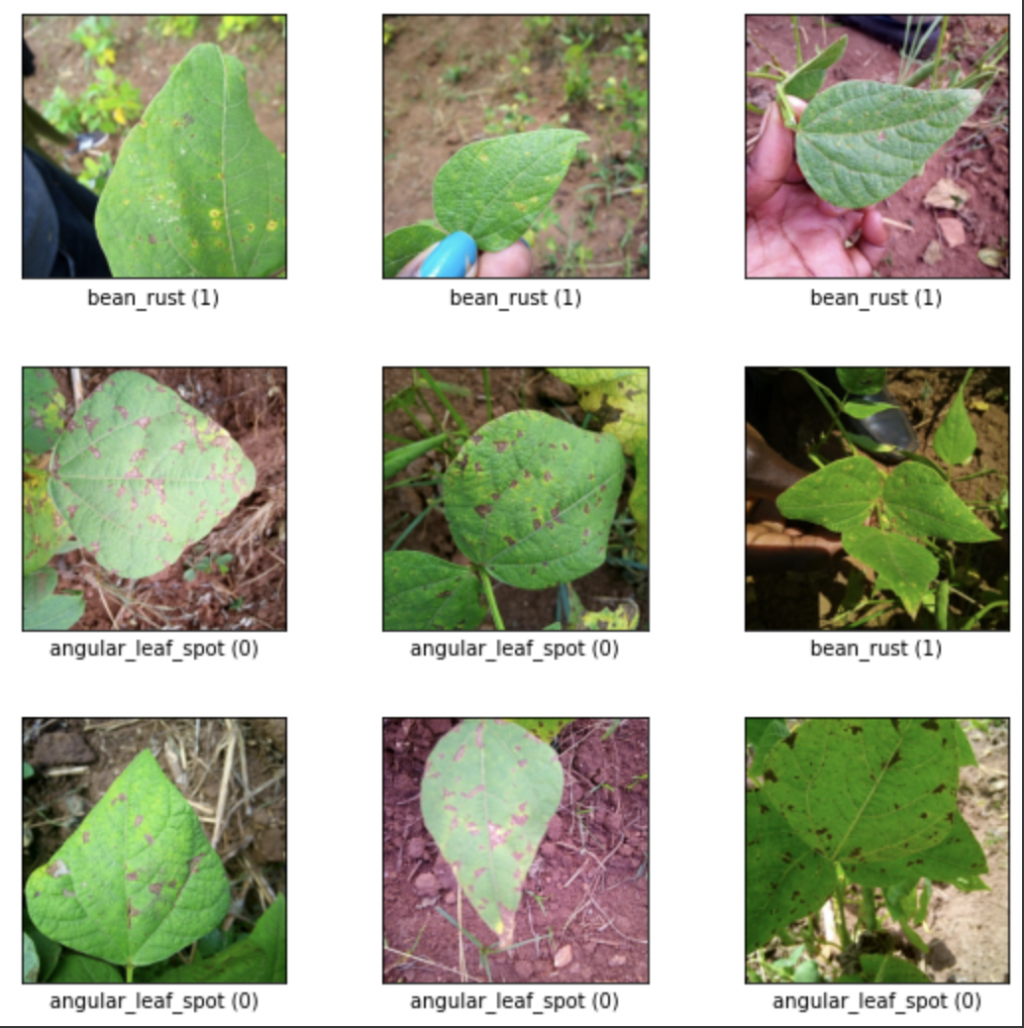

Output:

Epoch 96/100

loss: 0.0074 - sparse_categorical_accuracy: 0.9961 - val_loss: 0.9123 - val_sparse_categorical_accuracy: 0.8516

Experiment 2: Leaky ReLU

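With standard ReLU, any negative input produces an output of 0 and a gradient of 0, so many units can easily "die" and stop learning. Leaky ReLU instead outputs α·x for negative inputs, so the derivative there is α rather than 0 and the weights can still be updated. Here we use the Keras default, α = 0.3.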
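A minimal NumPy sketch (function names are illustrative, not Keras APIs) comparing the gradients of ReLU and Leaky ReLU on negative inputs, with α = 0.3 as in the Keras default:

```python
import numpy as np


def relu_grad(x):
    """Derivative of ReLU (taken as 0 at x == 0)."""
    return (x > 0).astype(float)


def leaky_relu(x, alpha=0.3):
    """Leaky ReLU: x for x > 0, alpha * x otherwise."""
    return np.where(x > 0, x, alpha * x)


def leaky_relu_grad(x, alpha=0.3):
    """Derivative of Leaky ReLU: 1 for x > 0, alpha otherwise."""
    return np.where(x > 0, 1.0, alpha)


x = np.array([-2.0, -0.5, 1.0])
print(relu_grad(x).tolist())        # [0.0, 0.0, 1.0]  no gradient for negative inputs
print(leaky_relu(x).tolist())       # [-0.6, -0.15, 1.0]
print(leaky_relu_grad(x).tolist())  # [0.3, 0.3, 1.0]  small but non-zero gradient
```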

```python
def bottleneck(net, filters, out_ch, strides, shortcut=True, zero_pad=False):
    """Same inverted residual block as Experiment 1, with LeakyReLU instead of ReLU6."""
    padding = 'valid' if zero_pad else 'same'
    shortcut_net = net

    net = tf.keras.layers.Conv2D(filters * 6, 1, use_bias=False, padding='same')(net)
    net = tf.keras.layers.BatchNormalization()(net)
    net = tf.keras.layers.LeakyReLU()(net)  # default negative slope 0.3

    if zero_pad:
        net = tf.keras.layers.ZeroPadding2D(padding=((0, 1), (0, 1)))(net)

    net = tf.keras.layers.DepthwiseConv2D(3, strides=strides, use_bias=False, padding=padding)(net)
    net = tf.keras.layers.BatchNormalization()(net)
    net = tf.keras.layers.LeakyReLU()(net)

    net = tf.keras.layers.Conv2D(out_ch, 1, use_bias=False, padding='same')(net)
    net = tf.keras.layers.BatchNormalization()(net)

    if shortcut:
        net = tf.keras.layers.Add()([net, shortcut_net])
    return net
```

```python
def get_mobilenetV2_leakyrelu(shape):
    input_node = tf.keras.layers.Input(shape=shape)

    net = tf.keras.layers.Conv2D(32, 3, (2, 2), use_bias=False, padding='same')(input_node)
    net = tf.keras.layers.BatchNormalization()(net)
    net = tf.keras.layers.LeakyReLU()(net)
    net = tf.keras.layers.DepthwiseConv2D(3, use_bias=False, padding='same')(net)
    net = tf.keras.layers.BatchNormalization()(net)
    net = tf.keras.layers.LeakyReLU()(net)
    net = tf.keras.layers.Conv2D(16, 1, use_bias=False, padding='same')(net)
    net = tf.keras.layers.BatchNormalization()(net)

    net = bottleneck(net, 16, 24, (2, 2), shortcut=False, zero_pad=True)  # block_1
    net = bottleneck(net, 24, 24, (1, 1), shortcut=True)  # block_2
    net = bottleneck(net, 24, 32, (2, 2), shortcut=False, zero_pad=True)  # block_3
    net = bottleneck(net, 32, 32, (1, 1), shortcut=True)  # block_4
    net = bottleneck(net, 32, 32, (1, 1), shortcut=True)  # block_5
    net = bottleneck(net, 32, 64, (2, 2), shortcut=False, zero_pad=True)  # block_6
    net = bottleneck(net, 64, 64, (1, 1), shortcut=True)  # block_7
    net = bottleneck(net, 64, 64, (1, 1), shortcut=True)  # block_8
    net = bottleneck(net, 64, 64, (1, 1), shortcut=True)  # block_9
    net = bottleneck(net, 64, 96, (1, 1), shortcut=False)  # block_10
    net = bottleneck(net, 96, 96, (1, 1), shortcut=True)  # block_11
    net = bottleneck(net, 96, 96, (1, 1), shortcut=True)  # block_12
    net = bottleneck(net, 96, 160, (2, 2), shortcut=False, zero_pad=True)  # block_13
    net = bottleneck(net, 160, 160, (1, 1), shortcut=True)  # block_14
    net = bottleneck(net, 160, 160, (1, 1), shortcut=True)  # block_15
    net = bottleneck(net, 160, 320, (1, 1), shortcut=False)  # block_16

    net = tf.keras.layers.Conv2D(1280, 1, use_bias=False, padding='same')(net)
    net = tf.keras.layers.BatchNormalization()(net)
    net = tf.keras.layers.LeakyReLU()(net)
    return input_node, net


input_node, net = get_mobilenetV2_leakyrelu((224, 224, 3))
net = tf.keras.layers.GlobalAveragePooling2D()(net)
net = tf.keras.layers.Dense(NUM_OF_CLASS)(net)
model = tf.keras.Model(inputs=[input_node], outputs=[net])

model.compile(
    optimizer=tf.keras.optimizers.SGD(LR),
    loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True),
    metrics=[tf.keras.metrics.SparseCategoricalAccuracy()],
)

history = model.fit(
    ds_train,
    epochs=EPOCHS,
    validation_data=ds_test,
    verbose=True)
```

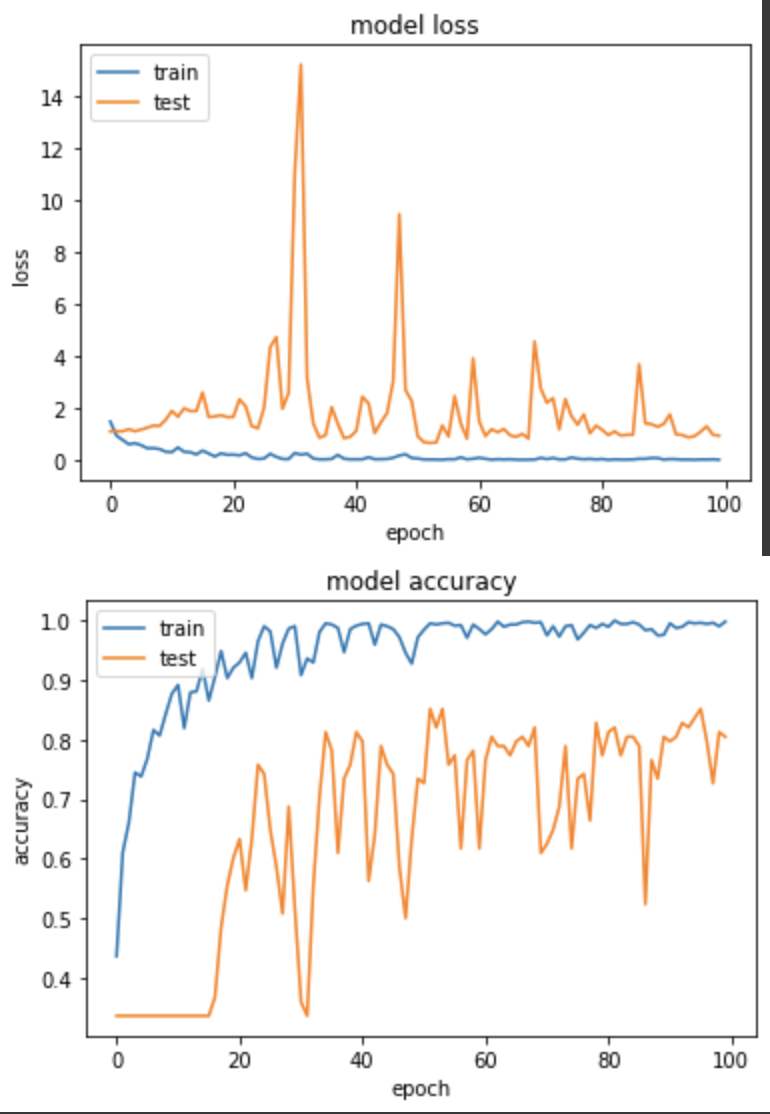

Output:

Epoch 96/100

loss: 0.0074 - sparse_categorical_accuracy: 0.9981 - val_loss: 1.2042 - val_sparse_categorical_accuracy: 0.7969

Experiment 3: PReLU

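Once Leaky ReLU came along, some people felt that a fixed α made the model less flexible, so why not turn α into a parameter that the model learns as well? That is PReLU. Here we initialize α to 0.25.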
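A minimal NumPy sketch of how α becomes trainable: since the output is α·x for x ≤ 0, the gradient of the loss with respect to a single shared α collects contributions only from the negative inputs. The inputs, the upstream gradient of 1 and the learning rate of 0.1 below are made-up values for illustration. Also note that Keras's `PReLU` learns a separate α for every element of the feature map by default; passing `shared_axes=[1, 2]` shares α across height and width, giving one α per channel as in the original PReLU paper.

```python
import numpy as np


def prelu(x, alpha):
    """PReLU forward: x for x > 0, alpha * x otherwise (alpha is learned)."""
    return np.where(x > 0, x, alpha * x)


def prelu_grad_alpha(x, upstream):
    """dL/dalpha for one shared alpha: sum of upstream * x over the negative inputs."""
    return np.sum(np.where(x > 0, 0.0, x) * upstream)


# Hypothetical toy values: one plain gradient-descent step on alpha
x = np.array([-2.0, -1.0, 3.0])
upstream = np.ones_like(x)  # pretend dL/dy == 1 for every output
alpha, lr = 0.25, 0.1

g = prelu_grad_alpha(x, upstream)  # (-2) + (-1) = -3.0
alpha -= lr * g                    # alpha grows to about 0.55
print(g, alpha)
```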

```python
def bottleneck(net, filters, out_ch, strides, shortcut=True, zero_pad=False):
    """Same inverted residual block as Experiment 1, with PReLU (alpha initialized to 0.25)."""
    padding = 'valid' if zero_pad else 'same'
    shortcut_net = net

    net = tf.keras.layers.Conv2D(filters * 6, 1, use_bias=False, padding='same')(net)
    net = tf.keras.layers.BatchNormalization()(net)
    # By default PReLU learns one alpha per element of the feature map;
    # shared_axes=[1, 2] would give one alpha per channel instead
    net = tf.keras.layers.PReLU(alpha_initializer=tf.keras.initializers.Constant(0.25))(net)

    if zero_pad:
        net = tf.keras.layers.ZeroPadding2D(padding=((0, 1), (0, 1)))(net)

    net = tf.keras.layers.DepthwiseConv2D(3, strides=strides, use_bias=False, padding=padding)(net)
    net = tf.keras.layers.BatchNormalization()(net)
    net = tf.keras.layers.PReLU(alpha_initializer=tf.keras.initializers.Constant(0.25))(net)

    net = tf.keras.layers.Conv2D(out_ch, 1, use_bias=False, padding='same')(net)
    net = tf.keras.layers.BatchNormalization()(net)

    if shortcut:
        net = tf.keras.layers.Add()([net, shortcut_net])
    return net
```

```python
def get_mobilenetV2_prelu(shape):
    input_node = tf.keras.layers.Input(shape=shape)

    net = tf.keras.layers.Conv2D(32, 3, (2, 2), use_bias=False, padding='same')(input_node)
    net = tf.keras.layers.BatchNormalization()(net)
    net = tf.keras.layers.PReLU(alpha_initializer=tf.keras.initializers.Constant(0.25))(net)
    net = tf.keras.layers.DepthwiseConv2D(3, use_bias=False, padding='same')(net)
    net = tf.keras.layers.BatchNormalization()(net)
    net = tf.keras.layers.PReLU(alpha_initializer=tf.keras.initializers.Constant(0.25))(net)
    net = tf.keras.layers.Conv2D(16, 1, use_bias=False, padding='same')(net)
    net = tf.keras.layers.BatchNormalization()(net)

    net = bottleneck(net, 16, 24, (2, 2), shortcut=False, zero_pad=True)  # block_1
    net = bottleneck(net, 24, 24, (1, 1), shortcut=True)  # block_2
    net = bottleneck(net, 24, 32, (2, 2), shortcut=False, zero_pad=True)  # block_3
    net = bottleneck(net, 32, 32, (1, 1), shortcut=True)  # block_4
    net = bottleneck(net, 32, 32, (1, 1), shortcut=True)  # block_5
    net = bottleneck(net, 32, 64, (2, 2), shortcut=False, zero_pad=True)  # block_6
    net = bottleneck(net, 64, 64, (1, 1), shortcut=True)  # block_7
    net = bottleneck(net, 64, 64, (1, 1), shortcut=True)  # block_8
    net = bottleneck(net, 64, 64, (1, 1), shortcut=True)  # block_9
    net = bottleneck(net, 64, 96, (1, 1), shortcut=False)  # block_10
    net = bottleneck(net, 96, 96, (1, 1), shortcut=True)  # block_11
    net = bottleneck(net, 96, 96, (1, 1), shortcut=True)  # block_12
    net = bottleneck(net, 96, 160, (2, 2), shortcut=False, zero_pad=True)  # block_13
    net = bottleneck(net, 160, 160, (1, 1), shortcut=True)  # block_14
    net = bottleneck(net, 160, 160, (1, 1), shortcut=True)  # block_15
    net = bottleneck(net, 160, 320, (1, 1), shortcut=False)  # block_16

    net = tf.keras.layers.Conv2D(1280, 1, use_bias=False, padding='same')(net)
    net = tf.keras.layers.BatchNormalization()(net)
    net = tf.keras.layers.PReLU(alpha_initializer=tf.keras.initializers.Constant(0.25))(net)
    return input_node, net


input_node, net = get_mobilenetV2_prelu((224, 224, 3))
net = tf.keras.layers.GlobalAveragePooling2D()(net)
net = tf.keras.layers.Dense(NUM_OF_CLASS)(net)
model = tf.keras.Model(inputs=[input_node], outputs=[net])

model.compile(
    optimizer=tf.keras.optimizers.SGD(LR),
    loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True),
    metrics=[tf.keras.metrics.SparseCategoricalAccuracy()],
)

history = model.fit(
    ds_train,
    epochs=EPOCHS,
    validation_data=ds_test,
    verbose=True)
```

Output:

Epoch 99/100

loss: 0.0119 - sparse_categorical_accuracy: 0.9961 - val_loss: 1.3487 - val_sparse_categorical_accuracy: 0.8125

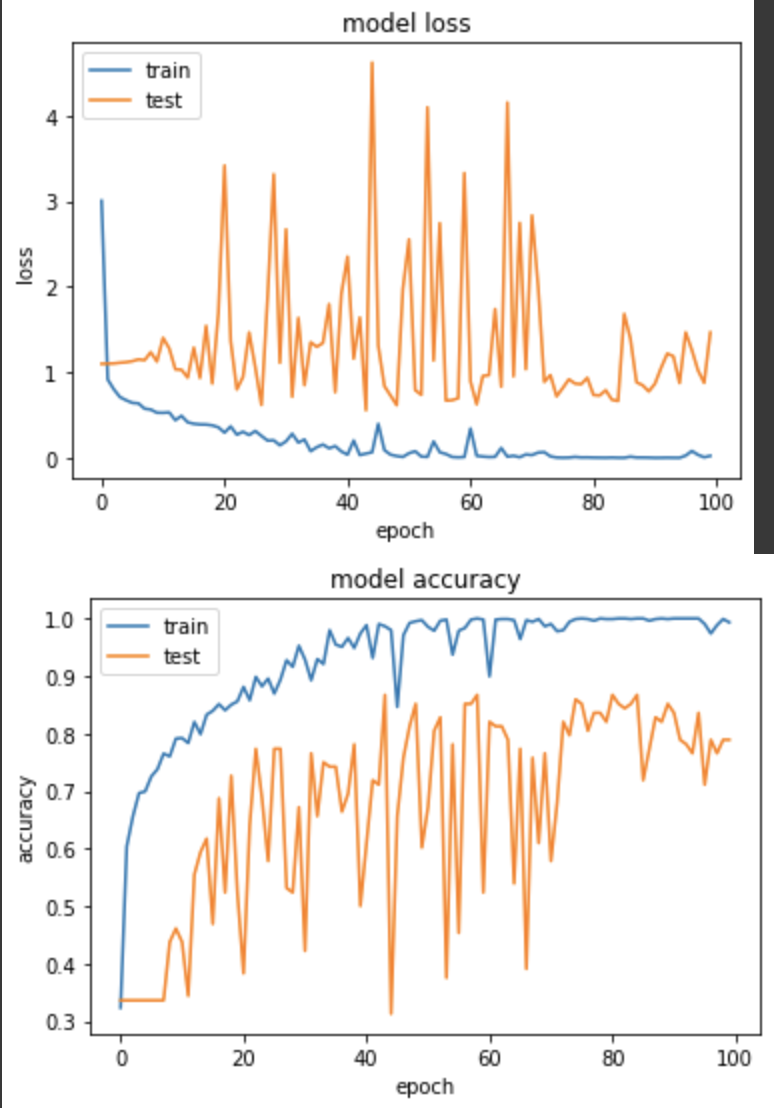

Experiment 4: SELU

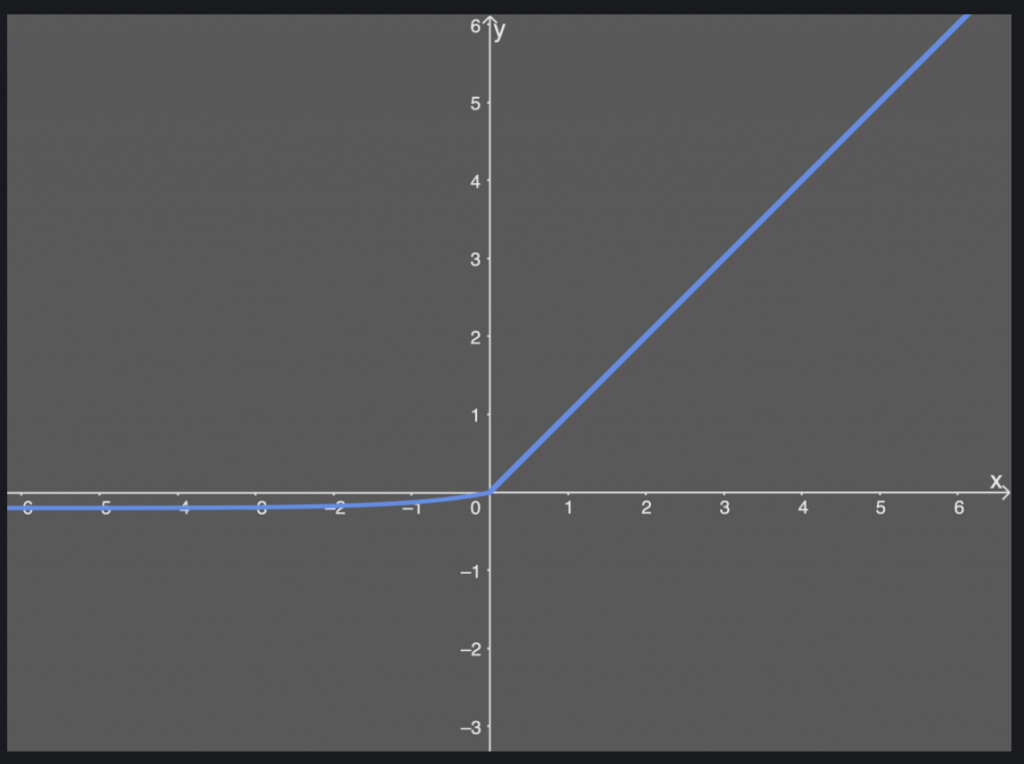

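Before introducing SELU, note that it is a variant of ELU. For inputs below 0, ELU follows an exponential curve, so it is more expensive to compute than ReLU. ELU is defined as:

$$\mathrm{ELU}(x) = \begin{cases} x, & x > 0 \\ \alpha\,(e^{x} - 1), & x \le 0 \end{cases}$$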
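A minimal NumPy sketch of ELU with α = 1.0 (the Keras default). It shows the exponential branch for negative inputs, which saturates towards -α instead of cutting off at 0:

```python
import numpy as np


def elu(x, alpha=1.0):
    """ELU: x for x > 0, alpha * (exp(x) - 1) for x <= 0."""
    # np.minimum keeps exp() from overflowing on the unused branch for large positive x;
    # np.expm1(t) computes exp(t) - 1 accurately for small |t|
    return np.where(x > 0, x, alpha * np.expm1(np.minimum(x, 0.0)))


x = np.array([-10.0, -1.0, 0.0, 2.0])
y = elu(x)
print(y)  # roughly [-0.99995, -0.63212, 0.0, 2.0]
```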

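SELU's formula is nearly identical to ELU's; the difference is that the original authors derived a specific value for α and a fixed scale factor λ:

$$\mathrm{SELU}(x) = \lambda \begin{cases} x, & x > 0 \\ \alpha\,(e^{x} - 1), & x \le 0 \end{cases}$$

with α ≈ 1.6733 and λ ≈ 1.0507. When using this activation, initialize the weights of the preceding layers with lecun_normal, and if your model uses dropout, replace it with AlphaDropout.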
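A minimal NumPy sketch of SELU with the constants from Klambauer et al. (2017), plus a quick numerical check of the self-normalizing property those constants were chosen for: standard-normal inputs come out with mean close to 0 and variance close to 1. This only checks the activation in isolation; in this experiment SELU is still combined with BatchNormalization and residual additions, which go beyond the plain feed-forward setting the constants were derived for.

```python
import numpy as np

# Constants from the SELU paper (Keras's built-in selu uses the same values, rounded)
ALPHA = 1.6732632423543772
LAMBDA = 1.0507009873554805


def selu(x):
    """SELU: lambda * x for x > 0, lambda * alpha * (exp(x) - 1) otherwise."""
    return LAMBDA * np.where(x > 0, x, ALPHA * np.expm1(np.minimum(x, 0.0)))


# Feed one million standard-normal samples through SELU and inspect the output statistics
rng = np.random.default_rng(0)
z = rng.standard_normal(1_000_000)
y = selu(z)
print(round(y.mean(), 3), round(y.var(), 3))  # mean near 0, variance near 1
```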

```python
def bottleneck(net, filters, out_ch, strides, shortcut=True, zero_pad=False):
    """Same inverted residual block as Experiment 1, with SELU and lecun_normal init."""
    padding = 'valid' if zero_pad else 'same'
    shortcut_net = net

    net = tf.keras.layers.Conv2D(filters * 6, 1, use_bias=False, padding='same',
                                 kernel_initializer='lecun_normal')(net)
    net = tf.keras.layers.BatchNormalization()(net)
    net = tf.keras.layers.Activation('selu')(net)

    if zero_pad:
        net = tf.keras.layers.ZeroPadding2D(padding=((0, 1), (0, 1)))(net)

    # DepthwiseConv2D takes depthwise_initializer; kernel_initializer does not set its kernel
    net = tf.keras.layers.DepthwiseConv2D(3, strides=strides, use_bias=False, padding=padding,
                                          depthwise_initializer='lecun_normal')(net)
    net = tf.keras.layers.BatchNormalization()(net)
    net = tf.keras.layers.Activation('selu')(net)

    net = tf.keras.layers.Conv2D(out_ch, 1, use_bias=False, padding='same',
                                 kernel_initializer='lecun_normal')(net)
    net = tf.keras.layers.BatchNormalization()(net)

    if shortcut:
        net = tf.keras.layers.Add()([net, shortcut_net])
    return net
```

```python
def get_mobilenetV2_selu(shape):
    input_node = tf.keras.layers.Input(shape=shape)

    net = tf.keras.layers.Conv2D(32, 3, (2, 2), use_bias=False, padding='same',
                                 kernel_initializer='lecun_normal')(input_node)
    net = tf.keras.layers.BatchNormalization()(net)
    net = tf.keras.layers.Activation('selu')(net)
    net = tf.keras.layers.DepthwiseConv2D(3, use_bias=False, padding='same',
                                          depthwise_initializer='lecun_normal')(net)
    net = tf.keras.layers.BatchNormalization()(net)
    net = tf.keras.layers.Activation('selu')(net)
    net = tf.keras.layers.Conv2D(16, 1, use_bias=False, padding='same',
                                 kernel_initializer='lecun_normal')(net)
    net = tf.keras.layers.BatchNormalization()(net)

    net = bottleneck(net, 16, 24, (2, 2), shortcut=False, zero_pad=True)  # block_1
    net = bottleneck(net, 24, 24, (1, 1), shortcut=True)  # block_2
    net = bottleneck(net, 24, 32, (2, 2), shortcut=False, zero_pad=True)  # block_3
    net = bottleneck(net, 32, 32, (1, 1), shortcut=True)  # block_4
    net = bottleneck(net, 32, 32, (1, 1), shortcut=True)  # block_5
    net = bottleneck(net, 32, 64, (2, 2), shortcut=False, zero_pad=True)  # block_6
    net = bottleneck(net, 64, 64, (1, 1), shortcut=True)  # block_7
    net = bottleneck(net, 64, 64, (1, 1), shortcut=True)  # block_8
    net = bottleneck(net, 64, 64, (1, 1), shortcut=True)  # block_9
    net = bottleneck(net, 64, 96, (1, 1), shortcut=False)  # block_10
    net = bottleneck(net, 96, 96, (1, 1), shortcut=True)  # block_11
    net = bottleneck(net, 96, 96, (1, 1), shortcut=True)  # block_12
    net = bottleneck(net, 96, 160, (2, 2), shortcut=False, zero_pad=True)  # block_13
    net = bottleneck(net, 160, 160, (1, 1), shortcut=True)  # block_14
    net = bottleneck(net, 160, 160, (1, 1), shortcut=True)  # block_15
    net = bottleneck(net, 160, 320, (1, 1), shortcut=False)  # block_16

    net = tf.keras.layers.Conv2D(1280, 1, use_bias=False, padding='same',
                                 kernel_initializer='lecun_normal')(net)
    net = tf.keras.layers.BatchNormalization()(net)
    net = tf.keras.layers.Activation('selu')(net)
    return input_node, net


input_node, net = get_mobilenetV2_selu((224, 224, 3))
net = tf.keras.layers.GlobalAveragePooling2D()(net)
net = tf.keras.layers.Dense(NUM_OF_CLASS)(net)
model = tf.keras.Model(inputs=[input_node], outputs=[net])

model.compile(
    optimizer=tf.keras.optimizers.SGD(LR),
    loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True),
    metrics=[tf.keras.metrics.SparseCategoricalAccuracy()],
)

history = model.fit(
    ds_train,
    epochs=EPOCHS,
    validation_data=ds_test,
    verbose=True)
```

Output:

Epoch 85/100

loss: 0.0018 - sparse_categorical_accuracy: 1.0000 - val_loss: 0.6651 - val_sparse_categorical_accuracy: 0.8672

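That wraps up today's experiments with four ReLU variants. All of them reached reasonable results: at the logged epochs, validation accuracy was 0.8516 for ReLU, 0.7969 for Leaky ReLU, 0.8125 for PReLU, and 0.8672 for SELU. Training accuracy was above 0.99 in every run while validation loss stayed far higher than training loss, which suggests all four models overfit noticeably, and with one logged epoch per run the smaller gaps between them may not be meaningful. If your task is urgent or you are training a model for the first time, the basic ReLU is probably good enough; if you are competing somewhere like Kaggle where every point of score counts, it is well worth trying some of the newer techniques.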
