树选手3号:XGboost [python实例]

照着前几天的逻辑今天来用python执行xgboost,刚开始一样先写score function方便之後的比较:

#from sklearn.metrics import accuracy_score, confusion_matrix, precision_score, recall_score, f1_score

def score(m, x_train, y_train, x_test, y_test, train=True):

if train:

pred=m.predict(x_train)

print('Train Result:\n')

print(f"Accuracy Score: {accuracy_score(y_train, pred)*100:.2f}%")

print(f"Precision Score: {precision_score(y_train, pred)*100:.2f}%")

print(f"Recall Score: {recall_score(y_train, pred)*100:.2f}%")

print(f"F1 score: {f1_score(y_train, pred)*100:.2f}%")

print(f"Confusion Matrix:\n {confusion_matrix(y_train, pred)}")

elif train == False:

pred=m.predict(x_test)

print('Test Result:\n')

print(f"Accuracy Score: {accuracy_score(y_test, pred)*100:.2f}%")

print(f"Precision Score: {precision_score(y_test, pred)*100:.2f}%")

print(f"Recall Score: {recall_score(y_test, pred)*100:.2f}%")

print(f"F1 score: {f1_score(y_test, pred)*100:.2f}%")

print(f"Confusion Matrix:\n {confusion_matrix(y_test, pred)}")

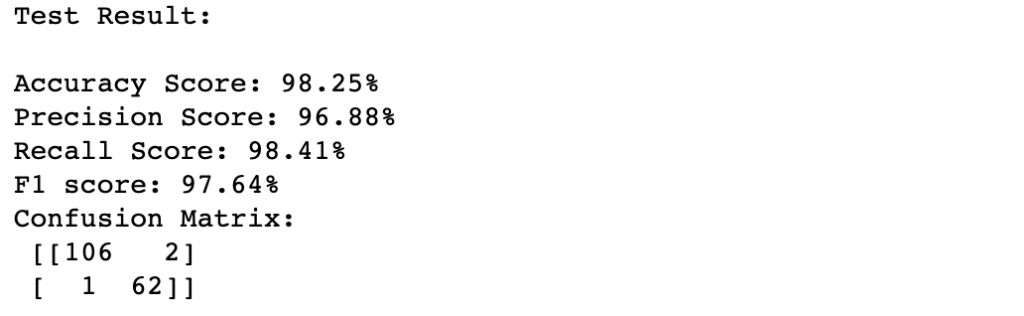

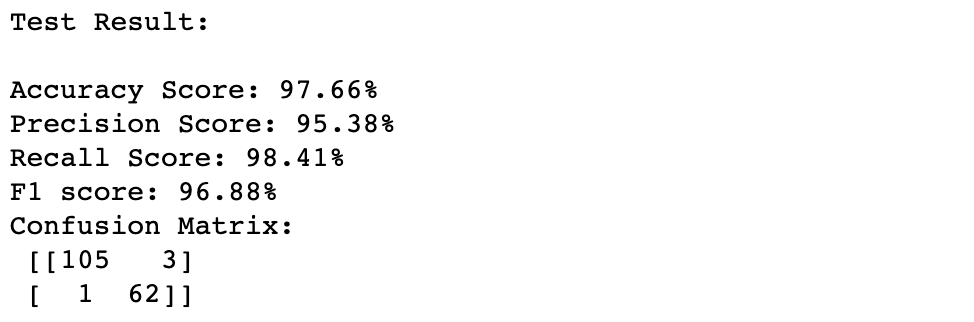

然後建立一个最简单的xgboost来看看(然後发现default的模型表现已经超过前天tuning後的random forest了):

from xgboost import XGBClassifier

xg1 = XGBClassifier()

xg1=xg1.fit(x_train, y_train)

score(xg1, x_train, y_train, x_test, y_test, train=False)

XGBClassifier tuning重要的参数有:

- n_estimators: 树的数量

- max_depth: 每颗树的最大深度

- learning_rate: 范围通常在0.01-0.2之间

- colsample_bytree:每次建树可以使用多少比例的features

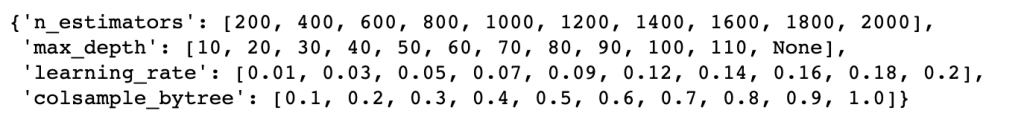

开始tuning,这里我们一样使用RandomizedSearchCV:

from sklearn.model_selection import RandomizedSearchCV

n_estimators = [int(x) for x in np.linspace(start=200, stop=2000, num=10)]

max_depth = [int(x) for x in np.linspace(10, 110, num=11)]

max_depth.append(None)

learning_rate=[round(float(x),2) for x in np.linspace(start=0.01, stop=0.2, num=10)]

colsample_bytree =[round(float(x),2) for x in np.linspace(start=0.1, stop=1, num=10)]

random_grid = {'n_estimators': n_estimators,

'max_depth': max_depth,

'learning_rate': learning_rate,

'colsample_bytree': colsample_bytree}

random_grid

xg4 = XGBClassifier(random_state=42)

#Random search of parameters, using 3 fold cross validation, search across 100 different combinations, and use all available cores

xg_random = RandomizedSearchCV(estimator = xg4, param_distributions=random_grid,

n_iter=100, cv=3, verbose=2, random_state=42, n_jobs=-1)

xg_random.fit(x_train,y_train)

xg_random.best_params_

xg5 = XGBClassifier(colsample_bytree= 0.2, learning_rate=0.09, max_depth= 10, n_estimators=1200)

xg5=xg5.fit(x_train, y_train)

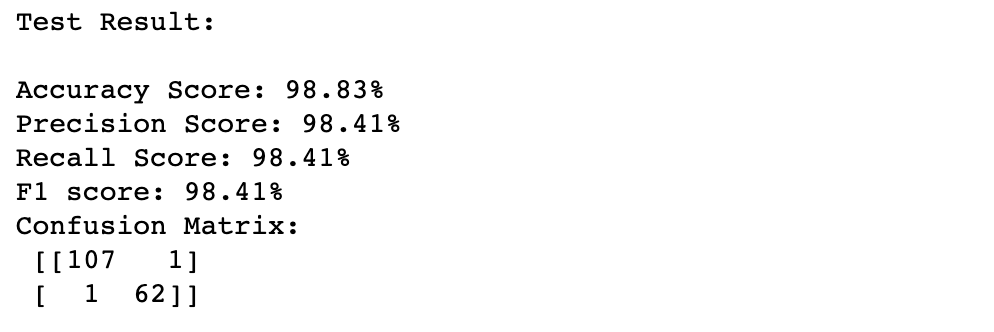

score(xg5, x_train, y_train, x_test, y_test, train=False)

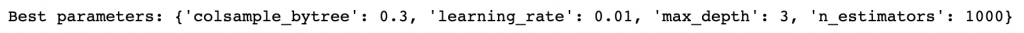

另外一个常见的tuning方法是使用GridSearch function,两者的差异是grid search会使用所有的参数组合,而RandomizedSearchCV是在每个参数设定的分布中随机配置,这里也放上grid search的方法给大家参考:

from sklearn.model_selection import GridSearchCV

params = { 'max_depth': [3,6,10],

'learning_rate': [0.01, 0.05, 0.1],

'n_estimators': [100, 500, 1000],

'colsample_bytree': [0.3, 0.7]}

xg2 = XGBClassifier(random_state=1)

clf = GridSearchCV(estimator=xg2,

param_grid=params,

scoring='neg_mean_squared_error',

verbose=1)

clf.fit(x_train, y_train)

print("Best parameters:", clf.best_params_)

xg3 = XGBClassifier(colsample_bytree= 0.3, learning_rate=0.01, max_depth= 3, n_estimators=1000)

xg3=xg3.fit(x_train, y_train)

score(xg3, x_train, y_train, x_test, y_test, train=False)

reference:

https://www.analyticsvidhya.com/blog/2016/03/complete-guide-parameter-tuning-xgboost-with-codes-python/

https://towardsdatascience.com/xgboost-fine-tune-and-optimize-your-model-23d996fab663

https://xgboost.readthedocs.io/en/latest/python/python_api.html#xgboost.XGBClassifier

<<: 【C language part 1】浅谈 C 语言-认识C

>>: JavaScript学习日记 : Day9 - 执行环境(Execution Context)

Day3 跟着官方文件学习Laravel-来一个登入画面

今天的目标是我要透过浏览器送出一段路径後,要在我的页面能够接收到我的登入画面 在官方文件的一开始说到...

R语言个人小笔记

快期末考了在这边做个笔记方便我观看哈哈 Data Component Data Frame &...

[C#] LeetCode 5. Longest Palindromic Substring

Given a string s, return the longest palindromic s...

『比昨天的自己还要好』的菜鸟工程师

回顾 30天咻的一下就过去了,第一次的铁人赛暂时画下小句点,那些期待补的篇幅我没忘(?) 漂向何处 ...

Day 29 | 关於像素那档事

看到目前为止,能够发现到影像辨识可说是深度学习应用中相当热门且实用的一个项目,然而如果要了解其中的运...