[3] Warm Up Before Training: The Learning Rate Warm-up Strategy

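Warm-up training is a method proposed in this paper. The main idea is to give the model something like a warm-up phase before formal training begins. Because an overly high learning rate easily makes a model unstable while it is still in its initial state, the learning rate is first increased gradually until a certain number of epochs has passed, and only then does normal training proceed. For a more detailed explanation of warm-up, see this earlier article.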

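Suppose there are 5 warmup epochs and the learning rate is 0.1. Over the first five epochs the learning rate then takes the values [0.02, 0.04, 0.06, 0.08, 0.1], and once warmup is over it slowly decays again, starting from [0.1, 0.0999, 0.0996...].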
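The warm-up ramp can be checked with a few lines of plain Python. This is a minimal sketch, assuming the linear rule `base_lr * (epoch + 1) / warmup_epochs` with a 0-based epoch index; the function name `warmup_lr` is made up for illustration.

```python
def warmup_lr(epoch, base_lr=0.1, warmup_epochs=5):
    """Linear warm-up: climb to base_lr over warmup_epochs (epoch is 0-based)."""
    return base_lr * ((epoch + 1) / warmup_epochs)

# Rounded to 4 decimals to hide floating-point noise such as 0.020000000000000004.
schedule = [round(warmup_lr(e), 4) for e in range(5)]
print(schedule)  # [0.02, 0.04, 0.06, 0.08, 0.1]
```

The rate reaches the full 0.1 only in the fifth epoch, so the earliest updates are taken with a fraction of the target step size.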

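In the first experiment we set up warmup training. I tried two learning rate decay strategies for warmup training, sin-decay and gradual-decay; this first experiment uses the sin function to decay the learning rate.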

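    import numpy as np
    import tensorflow as tf

    # alexnet_modify, ds_train, ds_test and EPOCHS are assumed to be defined
    # as in the earlier posts of this series.
    LR = 0.1           # target learning rate reached at the end of warm-up
    WARMUP_EPOCH = 5   # number of warm-up epochs

    model = alexnet_modify()
    model.compile(
        optimizer=tf.keras.optimizers.SGD(LR),
        loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True),
        metrics=[tf.keras.metrics.SparseCategoricalAccuracy()],
    )

    def scheduler(epoch, lr):
        # epoch is 0-based; lr is the optimizer's current learning rate.
        if epoch < WARMUP_EPOCH:
            # Linear warm-up: LR/5, 2*LR/5, ..., LR.
            warmup_percent = (epoch + 1) / WARMUP_EPOCH
            return LR * warmup_percent
        else:
            # sin-decay: sin(x) < x for x > 0, so the rate shrinks slightly each epoch.
            return float(np.sin(lr))

    callback = tf.keras.callbacks.LearningRateScheduler(scheduler, verbose=1)

    history = model.fit(
        ds_train,
        epochs=EPOCHS,
        validation_data=ds_test,
        callbacks=[callback],
        verbose=True)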

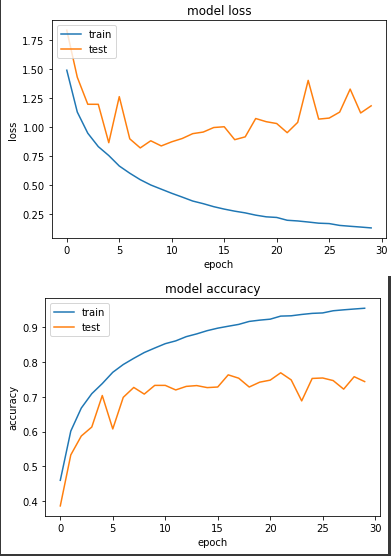

Results:

First five warm-up epochs:

    Epoch 00001: LearningRateScheduler 0.020000000000000004.
    loss: 1.4896 - sparse_categorical_accuracy: 0.4602 - val_loss: 1.8346 - val_sparse_categorical_accuracy: 0.3867
    Epoch 00002: LearningRateScheduler 0.04000000000000001.
    loss: 1.1285 - sparse_categorical_accuracy: 0.6014 - val_loss: 1.4291 - val_sparse_categorical_accuracy: 0.5330
    Epoch 00003: LearningRateScheduler 0.06.
    loss: 0.9458 - sparse_categorical_accuracy: 0.6674 - val_loss: 1.1956 - val_sparse_categorical_accuracy: 0.5872
    Epoch 00004: LearningRateScheduler 0.08000000000000002.
    loss: 0.8303 - sparse_categorical_accuracy: 0.7087 - val_loss: 1.1954 - val_sparse_categorical_accuracy: 0.6130
    Epoch 00005: LearningRateScheduler 0.1.
    loss: 0.7531 - sparse_categorical_accuracy: 0.7377 - val_loss: 0.8639 - val_sparse_categorical_accuracy: 0.7034

Best:

    Epoch 00022: LearningRateScheduler 0.09727657241617098.
    loss: 0.1944 - sparse_categorical_accuracy: 0.9312 - val_loss: 0.9504 - val_sparse_categorical_accuracy: 0.7686

Final:

    Epoch 00030: LearningRateScheduler 0.09606978125185672.
    loss: 0.1284 - sparse_categorical_accuracy: 0.9538 - val_loss: 1.1822 - val_sparse_categorical_accuracy: 0.7434

Compared with experiment 3 from the previous day, the accuracy is no better, and the first five warm-up epochs did not improve any faster either.

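The second experiment uses gradual-decay. Unlike the first, its decay is exponential in form: each epoch the scheduler raises the current learning rate to the power 1.0001.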

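    import tensorflow as tf

    # alexnet_modify, ds_train, ds_test and EPOCHS are assumed to be defined
    # as in the earlier posts of this series.
    LR = 0.1           # target learning rate reached at the end of warm-up
    WARMUP_EPOCH = 5   # number of warm-up epochs

    model = alexnet_modify()
    model.compile(
        optimizer=tf.keras.optimizers.SGD(LR),
        loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True),
        metrics=[tf.keras.metrics.SparseCategoricalAccuracy()],
    )

    def scheduler(epoch, lr):
        # epoch is 0-based; lr is the optimizer's current learning rate.
        if epoch < WARMUP_EPOCH:
            # Linear warm-up: LR/5, 2*LR/5, ..., LR.
            warmup_percent = (epoch + 1) / WARMUP_EPOCH
            return LR * warmup_percent
        else:
            # gradual-decay: for 0 < lr < 1, lr**1.0001 is slightly smaller than lr.
            return lr**1.0001

    callback = tf.keras.callbacks.LearningRateScheduler(scheduler, verbose=1)

    history = model.fit(
        ds_train,
        epochs=EPOCHS,
        validation_data=ds_test,
        callbacks=[callback],
        verbose=True)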

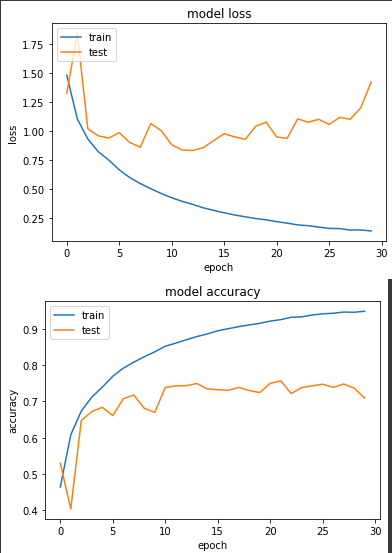

Results:

First five warm-up epochs:

    Epoch 00001: LearningRateScheduler 0.020000000000000004.
    loss: 1.4836 - sparse_categorical_accuracy: 0.4637 - val_loss: 1.3275 - val_sparse_categorical_accuracy: 0.5295
    Epoch 00002: LearningRateScheduler 0.04000000000000001.
    loss: 1.1076 - sparse_categorical_accuracy: 0.6081 - val_loss: 1.8452 - val_sparse_categorical_accuracy: 0.4032
    Epoch 00003: LearningRateScheduler 0.06.
    loss: 0.9335 - sparse_categorical_accuracy: 0.6735 - val_loss: 1.0221 - val_sparse_categorical_accuracy: 0.6477
    Epoch 00004: LearningRateScheduler 0.08000000000000002.
    loss: 0.8239 - sparse_categorical_accuracy: 0.7122 - val_loss: 0.9601 - val_sparse_categorical_accuracy: 0.6721
    Epoch 00005: LearningRateScheduler 0.1.
    loss: 0.7514 - sparse_categorical_accuracy: 0.7395 - val_loss: 0.9415 - val_sparse_categorical_accuracy: 0.6840

Best:

    Epoch 00022: LearningRateScheduler 0.0996090197648015.
    loss: 0.2075 - sparse_categorical_accuracy: 0.9262 - val_loss: 0.9396 - val_sparse_categorical_accuracy: 0.7571

Final:

    Epoch 00030: LearningRateScheduler 0.09942532736309981.
    loss: 0.1406 - sparse_categorical_accuracy: 0.9493 - val_loss: 1.4240 - val_sparse_categorical_accuracy: 0.7097

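The results are about the same as above, and still fall short of the previous day's experiment 3. That is not surprising: in that experiment the loss happened to drop clearly right when the learning rate went from 0.1 to 0.01, whereas both learning rate decay strategies here (sin and gradual) lower the learning rate only very slightly, so that by the 30th epoch it is still close to 0.1. I therefore think the warm-up strategy is better suited to training with many more epochs, or to decaying the learning rate at every step instead of every epoch once the warm-up epochs are over!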
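How little the two rules decay per epoch, and how much further they would fall if applied per step, can be estimated without training anything. The sketch below replays both scheduler rules in plain Python. The helper `replay` and the value `STEPS_PER_EPOCH = 100` are assumptions made for illustration; the real number of steps per epoch depends on the dataset and batch size.

```python
import math

def sin_decay(lr):
    return math.sin(lr)      # rule from the first experiment

def gradual_decay(lr):
    return lr ** 1.0001      # rule from the second experiment

def replay(decay, epochs=30, warmup_epochs=5, base_lr=0.1, updates_per_epoch=1):
    """Return the learning rate after the last decay update of the final epoch."""
    lr = base_lr
    for epoch in range(epochs):
        if epoch < warmup_epochs:
            # Linear warm-up, one value per epoch.
            lr = base_lr * ((epoch + 1) / warmup_epochs)
        else:
            # Apply the decay rule once per epoch, or once per step.
            for _ in range(updates_per_epoch):
                lr = decay(lr)
    return lr

STEPS_PER_EPOCH = 100  # hypothetical value, not taken from the experiments

for name, rule in [("sin", sin_decay), ("gradual", gradual_decay)]:
    per_epoch = replay(rule)
    per_step = replay(rule, updates_per_epoch=STEPS_PER_EPOCH)
    print(f"{name}: per-epoch {per_epoch:.4f}, per-step {per_step:.4f}")
```

With one update per epoch, both rules end close to the values logged for epoch 30 (about 0.096 for sin and 0.099 for gradual). With the assumed 100 updates per epoch, the same rules bring the rate down to roughly a third to a half of 0.1, which is the kind of drop the per-step suggestion is after.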
