[Common Natural Language Processing Techniques] N-Gram Model and Keyword Prediction (II)

Introduction

Last time we said that a language model (LM) assigns a probability to a piece of text.

Many natural language processing tasks rely on language models:

- Spell Correction:

  P("I spent five minutes reading the article.") > P("I spent five minutes readnig the article.")

- Speech Recognition:

  P("I saw a van.") >> P("eyes awe of an")

- Text Prediction:

  For example, word prediction on a mobile phone keyboard:

  P("Do you want to go to the store") > P("Do you want to go to the gym") > P("Do you want to go to the bank")

Image source: PhoneArena.com

N-Gram Language Models

Definition: an n-gram is a sequence of n consecutive words. For example, "store" is a 1-gram, "the store" is a 2-gram, "to the store" is a 3-gram, and so on.

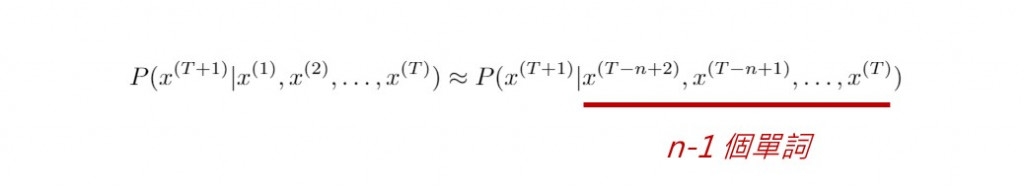

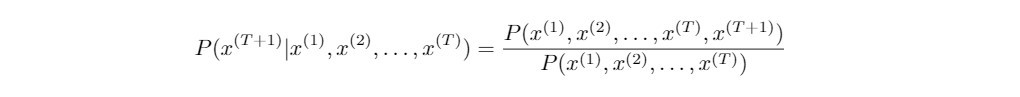
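To make the definition concrete, here is a minimal sketch that lists the n-grams of a short sentence using only the standard library. The helper name `extract_ngrams` is an assumption made for illustration; the article itself uses `nltk.util.ngrams` further down.

```python
def extract_ngrams(tokens, n):
    """Return all n-grams (as tuples) found in a list of tokens."""
    # Zip n staggered views of the token list: tokens[0:], tokens[1:], ...
    return list(zip(*(tokens[i:] for i in range(n))))


tokens = "do you want to go to the store".split()

unigrams = extract_ngrams(tokens, 1)
bigrams = extract_ngrams(tokens, 2)
trigrams = extract_ngrams(tokens, 3)

print(unigrams[-1])  # ('store',)
print(bigrams[-1])   # ('the', 'store')
print(trigrams[-1])  # ('to', 'the', 'store')
```

A sentence of 8 words yields 8 unigrams, 7 bigrams and 6 trigrams, because each n-gram needs n consecutive words.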

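Suppose we type "Do you want to go to the". The language model uses its underlying algorithm to predict the next word. Consider one possible continuation: "Do you want to go to the store". The language model computes the conditional probability P("store" | "Do you want to go to the"), as shown below: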

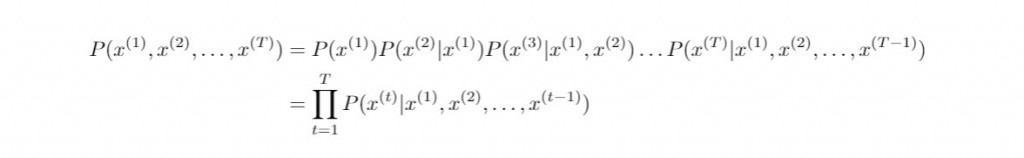

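In our example, *"Do", "you", ..., "the"* is the given word sequence, and the next word to predict, "store", must come from a vocabulary built in advance. The left-hand side above is hard to compute directly, but the right-hand side of the equation is worth a closer look. In theory, then, the language model reduces to estimating the probability of the following T-word sequence: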

A Bit of Probability Modeling

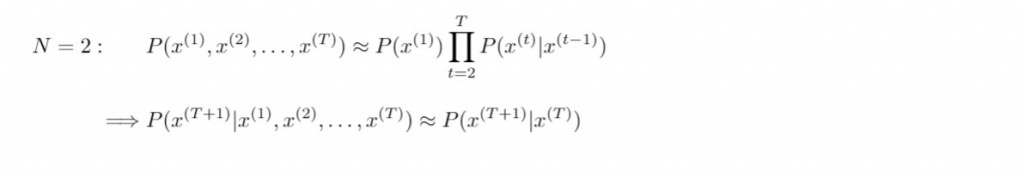

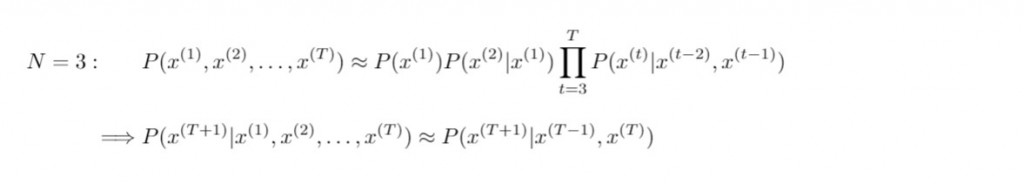

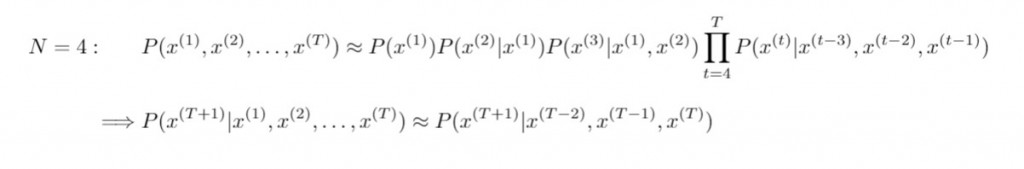
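For reference, the relationship discussed above can be written out as follows, with $w_1, \dots, w_T$ as assumed notation for the words of the sequence (in the running example, $w_T$ is "store"). This is the standard definition of conditional probability and the chain rule, given here as a sketch of the reasoning:

$$
P(w_T \mid w_1, \dots, w_{T-1}) = \frac{P(w_1, \dots, w_{T-1}, w_T)}{P(w_1, \dots, w_{T-1})}
$$

$$
P(w_1, \dots, w_T) = \prod_{t=1}^{T} P(w_t \mid w_1, \dots, w_{t-1})
$$

The first line shows why predicting the next word comes down to estimating the probability of whole word sequences. The second line shows that such a sequence probability factors into next-word predictions.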

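An N-gram model estimates the probability of word sequences of length N, and then uses the N-1 known preceding words (the history forms a Markov chain of order N-1) to predict the next word. Depending on the parameter N, we obtain the following N-gram models (the cases N > 4 follow the same pattern):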

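- Unigram:

  We compute the approximate probability P("store").

- Bigram:

  We compute the approximate probability P("store" | "the").

- Trigram:

  We compute the approximate probability P("store" | "to the").

- 4-gram:

  We compute the approximate probability P("store" | "go to the").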
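Written with assumed notation $w_1, \dots, w_T$ for the words of the sequence (so that $w_T$ is "store" in the example), the four approximations in the list correspond to truncating the history to the last $N-1$ words:

$$
\begin{aligned}
\text{Unigram:} \quad & P(w_T \mid w_1, \dots, w_{T-1}) \approx P(w_T) \\
\text{Bigram:} \quad & P(w_T \mid w_1, \dots, w_{T-1}) \approx P(w_T \mid w_{T-1}) \\
\text{Trigram:} \quad & P(w_T \mid w_1, \dots, w_{T-1}) \approx P(w_T \mid w_{T-2}, w_{T-1}) \\
\text{4-gram:} \quad & P(w_T \mid w_1, \dots, w_{T-1}) \approx P(w_T \mid w_{T-3}, w_{T-2}, w_{T-1})
\end{aligned}
$$

In general, an N-gram model uses $P(w_T \mid w_{T-N+1}, \dots, w_{T-1})$.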

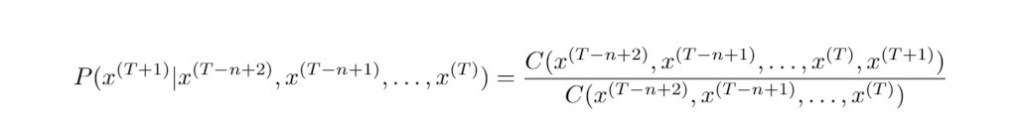

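The n-gram model computes the conditional probability on the right-hand side below:

As mentioned yesterday, the conditional probability on the right-hand side is the ratio of the number of occurrences of the n-gram to the number of occurrences of the (n-1)-gram, which is a relative frequency.

Relative frequency is used to estimate the conditional probability: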
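As a minimal sketch of this relative-frequency estimate, the function below computes P(word | history) as count(history followed by word) / count(history). The toy corpus and the names `ngram_counts` and `conditional_probability` are assumptions made for illustration, not part of the article's code.

```python
from collections import Counter


def ngram_counts(tokens, n):
    """Count every n-gram (as a tuple) in a list of tokens."""
    return Counter(zip(*(tokens[i:] for i in range(n))))


def conditional_probability(tokens, history, word):
    """Estimate P(word | history) by relative frequency."""
    history = tuple(history)
    if not history:
        # Unigram case: plain relative frequency of the word
        return tokens.count(word) / len(tokens)
    n = len(history) + 1
    numerator = ngram_counts(tokens, n)[history + (word,)]
    denominator = ngram_counts(tokens, n - 1)[history]
    return numerator / denominator if denominator else 0.0


# Toy corpus (made up for this example)
corpus = ("do you want to go to the store . "
          "do you want to go to the gym . "
          "i went to the store").split()

# "the" occurs 3 times and is followed by "store" twice
p_store = conditional_probability(corpus, ["the"], "store")
# "to the" occurs 3 times and is followed by "gym" once
p_gym = conditional_probability(corpus, ["to", "the"], "gym")

print(p_store, p_gym)
```

Note that a history never seen in the corpus gives a zero denominator. The sketch returns 0.0 in that case, whereas practical models usually apply some form of smoothing.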

That was a lot of theory, and you are probably nodding off in front of the screen by now. Let's wake up with some Python code!

How to Count N-Grams

Before actually using an n-gram model to predict keywords, let's first count the most frequent n-grams in an article. As before, we use the BBC news story Facebook Under Fire Over Secret Teen Research as our text data.

First, import the required modules and the ngrams function already defined in NLTK:

    from collections import Counter

    from nltk.util import ngrams

    from preprocess_text import preprocess, clean_text  # user-defined functions

Next, load the full news text as raw data, then clean and tokenise it (there is no need to split it into sentences first):

    # Load the news article as raw text data
    with open("bbc_news.txt", 'r') as f:
        raw_news = f.read()

    # Clean and tokenise raw text
    tokenised = preprocess(clean_text(raw_news))

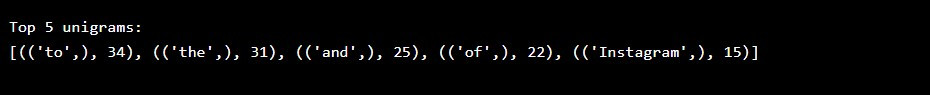
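The module `preprocess_text` is user-defined and its source is not shown here. As a rough idea of what such helpers might do, the following is a purely hypothetical stand-in: the function bodies and the sample sentence are assumptions, and the real `clean_text` and `preprocess` may behave differently.

```python
import re


def clean_text(raw_text):
    """Hypothetical cleaner: lower-case, then turn other characters into spaces."""
    text = raw_text.lower()
    # Keep letters, digits and apostrophes; everything else becomes a space
    text = re.sub(r"[^a-z0-9']+", " ", text)
    return text.strip()


def preprocess(text):
    """Hypothetical tokeniser: split the cleaned text on whitespace."""
    return text.split()


# Made-up sample sentence, not a quote from the news article
sample = "Facebook is under fire; it's facing questions, again!"
tokenised_sample = preprocess(clean_text(sample))
print(tokenised_sample)
```

The result is a flat list of lower-case word tokens, which is the shape of input that n-gram counting expects.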

Count the five most frequent 1-grams:

    # obtain all unigrams
    news_unigrams = ngrams(tokenised, 1)

    # count occurrences of each unigram
    news_unigrams_freq = Counter(news_unigrams)

    # review top 5 frequent unigrams in the news
    print("Top 5 unigrams:\n{}".format(news_unigrams_freq.most_common(5)))

Output:

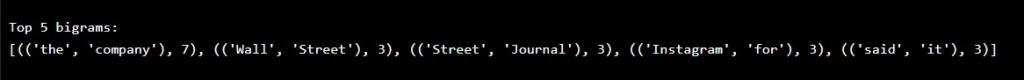

Count the five most frequent 2-grams:

    # obtain all bigrams
    news_bigrams = ngrams(tokenised, 2)

    # count occurrences of each bigram
    news_bigrams_freq = Counter(news_bigrams)

    # review top 5 frequent bigrams in the news
    print("Top 5 bigrams:\n{}".format(news_bigrams_freq.most_common(5)))

Output:

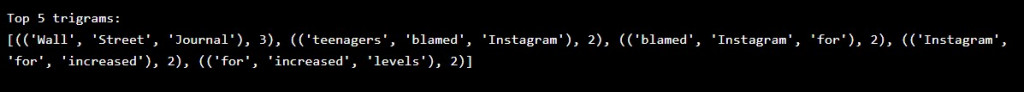
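The unigram and bigram snippets differ only in the value of n, so the pattern can be wrapped in a small helper. The sketch below is one possible way to do that. The name `top_ngrams` and the toy token list are assumptions, and a standard-library `zip` stands in for `nltk.util.ngrams` so that the example runs without NLTK.

```python
from collections import Counter


def top_ngrams(tokens, n, k=5):
    """Return the k most frequent n-grams in tokens as (ngram, count) pairs."""
    # Zip n staggered views of the token list to form the n-grams
    grams = zip(*(tokens[i:] for i in range(n)))
    return Counter(grams).most_common(k)


# Toy tokens (made up for this example)
toy_tokens = "to be or not to be that is the question".split()

for n in (1, 2, 3):
    print("Top 2 {}-grams:\n{}".format(n, top_ngrams(toy_tokens, n, k=2)))
```

With the real data, `top_ngrams(tokenised, 3)` would play the same role as the separate trigram steps.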

Count the five most frequent 3-grams:

    # obtain all trigrams
    news_trigrams = ngrams(tokenised, 3)

    # count occurrences of each trigram
    news_trigrams_freq = Counter(news_trigrams)

    # review top 5 frequent trigrams in the news
    print("Top 5 trigrams:\n{}".format(news_trigrams_freq.most_common(5)))

Output:

Now that we have a feel for the relative frequencies of n-grams, let's use the n-gram model's next-word prediction mechanism to write a made-up short passage!
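Before generating random text, it may help to see the prediction mechanism in its deterministic form: rank the candidate next words by relative frequency, much like the suggestion bar of a phone keyboard. The following is a minimal bigram sketch. The function names and the toy corpus are assumptions made for illustration.

```python
from collections import Counter, defaultdict


def train_bigram_counts(tokens):
    """For every word, count the words that directly follow it."""
    followers = defaultdict(Counter)
    for prev_word, next_word in zip(tokens, tokens[1:]):
        followers[prev_word][next_word] += 1
    return followers


def suggest_next(followers, prev_word, k=3):
    """Return up to k (word, probability) pairs ranked by relative frequency."""
    counts = followers.get(prev_word, Counter())
    total = sum(counts.values())
    return [(word, count / total) for word, count in counts.most_common(k)]


# Toy corpus (made up): "the" is followed by store x3, gym x2, bank x1
toy_tokens = ("go to the store . " * 3
              + "go to the gym . " * 2
              + "go to the bank .").split()

followers = train_bigram_counts(toy_tokens)
suggestions = suggest_next(followers, "the")
print(suggestions)
```

Here "store" is ranked first with probability 3/6, followed by "gym" and "bank". A word that never appears in the corpus yields an empty suggestion list.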

Random Text Generation

We first define a Markov chain text generator class based on n-grams:

    import itertools
    import random
    import re
    from collections import Counter, defaultdict, deque

    # word_tokenize needs NLTK's Punkt tokenizer data to be downloaded beforehand
    from nltk.tokenize import word_tokenize


    class MarkovChain:

        def __init__(self, sequence_length = 3, seeded = False):
            self.lookup_dict = defaultdict(list)
            self.most_common = list()
            self.seq_len = sequence_length
            self._seeded = seeded
            self.__seed_me()

        def __seed_me(self, rand_seed = None):
            # Seed the random number generator only if it is not marked as seeded
            if self._seeded is not True:
                try:
                    if rand_seed is not None:
                        random.seed(rand_seed)
                    else:
                        random.seed()
                    self._seeded = True
                except NotImplementedError:
                    self._seeded = False

        def add_document(self, text):
            """
            text: string of raw text data
            """
            preprocessed_list = self._preprocess(text)
            # Word (unigram) counts, used when seq_len is 1
            self.most_common = Counter(preprocessed_list).most_common(50)
            pairs = self.__generate_tuple_keys(preprocessed_list)
            for pair in pairs:
                self.lookup_dict[pair[0]].append(pair[1])

        def _preprocess(self, text):
            # Replace non-word characters with spaces, lower-case, then tokenise
            cleaned = re.sub(r"\W+", ' ', text).lower()
            tokenized = word_tokenize(cleaned)
            return tokenized

        def __generate_tuple_keys(self, data):
            # Yield (history, next word) pairs. The history holds seq_len - 1
            # words, so that seq_len actually controls the order of the chain.
            if len(data) < self.seq_len:
                return
            order = max(self.seq_len - 1, 1)
            for i in range(len(data) - order):
                yield tuple(data[i:i + order]), data[i + order]

        def generate_text(self, max_length = 15):
            context = deque()
            output = list()
            if len(self.lookup_dict) > 0:
                self.__seed_me(rand_seed = len(self.lookup_dict))
                # Start the chain from the first history seen in the text
                chain_head = list(self.lookup_dict)[0]
                context.extend(chain_head)
                if self.seq_len > 1:
                    while len(output) < (max_length - len(context)):
                        # .get() avoids adding empty entries for unseen histories
                        next_choices = self.lookup_dict.get(tuple(context), [])
                        if len(next_choices) > 0:
                            next_word = random.choice(next_choices)
                            context.append(next_word)
                            output.append(context.popleft())
                        else:
                            break
                    output.extend(list(context))
                else:
                    # Unigram case: draw words from the most common ones
                    while len(output) < (max_length - 1):
                        next_choices = [word[0] for word in self.most_common]
                        next_word = random.choice(next_choices)
                        output.append(next_word)
            print("context: {}".format(context))
            return ' '.join(output)

        def get_most_common_ngrams(self, n = 5):
            # most_common stores word counts, so report them as words
            print("The {} most common words: {}".format(n, self.most_common[:n]))

        def get_lookup_dict(self, n = 10):
            print("Lookup dict: (showing the first {} pairs only)\n{}".format(n, dict(itertools.islice(self.lookup_dict.items(), n))))
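To see why a lookup table of this kind amounts to sampling by relative frequency, consider the toy sketch below. It stores, for each history, the list of words that followed it, duplicates included, so a uniform `random.choice` over that list picks each follower in proportion to how often it occurred. The helper `build_lookup`, the `order` parameter and the toy sentence are assumptions made for illustration, not part of the class.

```python
import random
from collections import defaultdict


def build_lookup(tokens, order=1):
    """Map each history of `order` words to the list of words that follow it."""
    lookup = defaultdict(list)
    for i in range(len(tokens) - order):
        lookup[tuple(tokens[i:i + order])].append(tokens[i + order])
    return lookup


# Toy tokens (made up for this example)
toy_tokens = "go to the store then go to the gym then go to the store".split()

lookup = build_lookup(toy_tokens, order=2)
followers = lookup[("to", "the")]
print(followers)  # ['store', 'gym', 'store']

# Draw many next words with a local, seeded generator
rng = random.Random(0)
draws = [rng.choice(followers) for _ in range(3000)]
share_store = draws.count("store") / len(draws)
print(share_store)  # close to 2/3, the relative frequency of "store"
```

Keeping duplicates in the list trades memory for simplicity. Storing counts and sampling with weights would give the same distribution.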

We again load the BBC news article above as the raw text:

    # Load news article as raw text
    with open("bbc_news.txt", 'r') as f:
        raw_news = f.read()

Next, we create a Markov chain generator object based on 2-grams:

    my_markov = MarkovChain(sequence_length = 2, seeded = True)

    my_markov.add_document(raw_news)

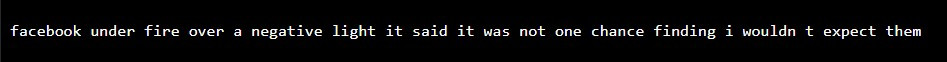

Try generating a passage of 20 words:

    random_news = my_markov.generate_text(max_length = 20)

    print(random_news)

Generated passage:

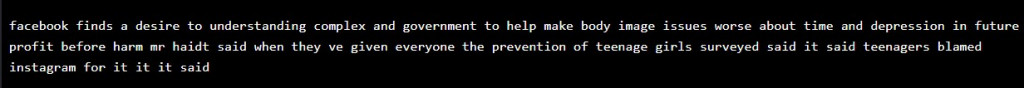

A bit incoherent? Let's try generating a passage with 3-grams, this time with 50 words:

    my_markov = MarkovChain(sequence_length = 3, seeded = True)

    my_markov.add_document(raw_news)

    random_news = my_markov.generate_text(max_length = 50)

    print(random_news)

Let's take a look at the generated passage:

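We can see that text generated from the n-gram statistics of a corpus looks reasonably natural locally, both semantically and grammatically, but as a whole it reads awkwardly and fails to get a point across. This is the weakness of statistical approaches. Today's language prediction incorporates neural networks, which greatly narrows the gap between model output and natural language, to the point that we as users often cannot tell the two apart!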

That wraps up today's introduction. Enjoy the long holiday, and good night!
