[Day 29] Application 3: Building the Easy Eye App

Picking up from yesterday, we start today by testing whether each of the utils modules works correctly:

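- In the `applications/easy-eye-app` directory, add a file named `test.py` to hold the test code.
- In the `applications/easy-eye-app/utils` directory, add a file named `__init__.py` (note the two underscores on each side) to declare the directory as a package.
- Prepare a face image for testing, copy it into the `application/easy-eye-app` directory, and name it `sample_1.jpg`.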
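To make the role of `__init__.py` a little more concrete, here is a minimal sketch that builds a throwaway package in a temporary directory and imports a function from it, the same way `test.py` imports from `utils`. The names `demo_utils`, `demo_mod`, and `ping` are hypothetical and are not part of the project.

```python
import importlib
import sys
import tempfile
from pathlib import Path


def build_demo_package(root: Path) -> None:
    """Create root/demo_utils/ with an __init__.py and one module."""
    pkg = root / "demo_utils"
    pkg.mkdir()
    # An empty __init__.py marks the directory as a regular package
    (pkg / "__init__.py").write_text("")
    (pkg / "demo_mod.py").write_text("def ping():\n    return 'pong'\n")


def import_ping(root: Path):
    """Import demo_utils.demo_mod with root temporarily on sys.path."""
    sys.path.insert(0, str(root))
    try:
        importlib.invalidate_caches()
        module = importlib.import_module("demo_utils.demo_mod")
        return module.ping
    finally:
        sys.path.remove(str(root))


with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    build_demo_package(root)
    ping = import_ping(root)
    result = ping()

print(result)
```

Running the tests from the project directory works the same way: that directory is on the import path, so `utils` resolves as a package.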

face_detector.py

- Open `test.py` and write the following test code:

```python
import unittest

import cv2

from utils.face_detector import FaceDetector


class TestClass(unittest.TestCase):
    def setUp(self):
        self.face_detector = FaceDetector()
        self.img = cv2.imread("sample_1.jpg")

    def test_face_detector(self):
        rects = self.face_detector.detect(self.img)
        # Exactly one face should be detected
        assert len(rects) == 1
        # Each face's bounding box exposes four sides (top, right, bottom, left)
        assert hasattr(rects[0], "top")
        assert hasattr(rects[0], "right")
        assert hasattr(rects[0], "bottom")
        assert hasattr(rects[0], "left")
```

- Open a terminal, change to the `application/easy-eye-app` directory, and run `python -m unittest test.py` to check that our method works as expected:

```
$ python -m unittest test.py
.
----------------------------------------------------------------------
Ran 1 test in 2.770s

OK
```

landmark_detector.py

- Open `test.py` again.
- Add the import `from utils.landmark_detector import LandmarkDetector`.
- In the `setUp` method, add `self.landmark_detector = LandmarkDetector(68)`.
- Add a `test_landmark_detector` method:

```python
    def test_landmark_detector(self):
        rects = self.face_detector.detect(self.img)
        shapes = self.landmark_detector.detect(self.img, rects)
        # Exactly one face should be detected
        assert len(shapes) == 1
        # The face should have 68 landmark points
        assert len(shapes[0]) == 68
```

- Run `python -m unittest test.py` again:

```
$ python -m unittest test.py
..
----------------------------------------------------------------------
Ran 2 tests in 5.183s

OK
```

head_pose_estimator.py

- Open `test.py`.
- Add the import `from utils.head_pose_estimator import HeadPoseEstimator`.
- Add a `test_head_pose_estimator` method.
- The complete code now looks like this:

```python
import unittest

import cv2

from utils.face_detector import FaceDetector
from utils.head_pose_estimator import HeadPoseEstimator
from utils.landmark_detector import LandmarkDetector


class TestClass(unittest.TestCase):
    def setUp(self):
        self.img = cv2.imread("sample_1.jpg")
        self.face_detector = FaceDetector()
        self.landmark_detector = LandmarkDetector(68)
        self.head_pose_estimator = HeadPoseEstimator(self.img.shape[1], self.img.shape[0])

    def test_face_detector(self):
        rects = self.face_detector.detect(self.img)
        # Exactly one face should be detected
        assert len(rects) == 1
        # Each face's bounding box exposes four sides (top, right, bottom, left)
        assert hasattr(rects[0], "top")
        assert hasattr(rects[0], "right")
        assert hasattr(rects[0], "bottom")
        assert hasattr(rects[0], "left")

    def test_landmark_detector(self):
        rects = self.face_detector.detect(self.img)
        shapes = self.landmark_detector.detect(self.img, rects)
        # Exactly one face should be detected
        assert len(shapes) == 1
        # The face should have 68 landmark points
        assert len(shapes[0]) == 68

    def test_head_pose_estimator(self):
        rects = self.face_detector.detect(self.img)
        shapes = self.landmark_detector.detect(self.img, rects)
        for shape in shapes:
            pts = self.head_pose_estimator.head_pose_estimate(shape)
            # Four points in total (two pairs, used to judge the vertical and horizontal angles)
            assert len(pts) == 4
            # Every point is a tuple: (x, y)
            assert all(type(pt) == tuple and len(pt) == 2 for pt in pts)
```

- Run the tests again with `python -m unittest test.py`:

```
$ python -m unittest test.py
...
----------------------------------------------------------------------
Ran 3 tests in 6.767s

OK
```
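One detail in the last check is easy to get wrong: asserting a list of booleans passes whenever the list is non-empty, whatever it contains, so each point has to be verified with `all()`. The small sketch below shows the difference. The helper `is_point` and the sample data are hypothetical and only serve the illustration.

```python
def is_point(pt):
    """Return True when pt is a 2-element tuple such as (x, y)."""
    return isinstance(pt, tuple) and len(pt) == 2


good_pts = [(1, 2), (3, 4), (5, 6), (7, 8)]
bad_pts = [(1, 2), [3, 4], (5, 6, 7), "oops"]

# A non-empty list is truthy regardless of its contents,
# so asserting the list itself can never fail.
list_check_on_bad = bool([is_point(pt) for pt in bad_pts])

# all() only passes when every element satisfies the check.
all_check_on_good = all(is_point(pt) for pt in good_pts)
all_check_on_bad = all(is_point(pt) for pt in bad_pts)

print(list_check_on_bad, all_check_on_good, all_check_on_bad)
```

Inside a `unittest.TestCase`, `self.assertTrue(all(...))` would serve the same purpose and gives a clearer failure message.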

That completes the unit tests for each feature. With a bit more confidence, let's move on to the main logic!

Writing the main program

- Add a `main.py` file to the `application/easy-eye-app` directory.
- The code, with explanatory comments, is as follows:

```python
import math
import time

import cv2
import imutils
from imutils import face_utils
from imutils.video import WebcamVideoStream
from scipy.spatial import distance as dist

from utils.face_detector import FaceDetector
from utils.head_pose_estimator import HeadPoseEstimator
from utils.landmark_detector import LandmarkDetector

PROPERTIES = {
    # Blink exercise
    "exercise_1": {
        # Whether the exercise has been completed
        "is_completed": False,
        # Set after each completed blink; cleared once the eyes reopen
        "re_check": False,
        # Number of completed repetitions
        "completed_times": 0,
        # Total repetitions required
        "total_times": 2,
        # Eye aspect ratio below which the eyes count as closed
        "eye_ar_thresh": 0.3,
        # Frames the eyes must stay closed before it is not a "normal blink"
        "eye_ar_consec_frames": 25,
        # Counter of frames with the eyes closed
        "eye_ar_consec_counter": 0
    },
    # Eye movement exercise
    "exercise_2": {
        # Whether the exercise has been completed
        "is_completed": False,
        # Direction the eyes have most recently completed
        "look_direction": None,
        # Frame counter for the current direction
        "look_count": 0,
        # Frames required for each direction
        "look_max_count": 25
    }
}


def eye_aspect_ratio(eye):
    # Distances between the two pairs of vertical landmarks
    A = dist.euclidean(eye[1], eye[5])
    B = dist.euclidean(eye[2], eye[4])
    # Distance between the horizontal landmarks
    C = dist.euclidean(eye[0], eye[3])
    # Eye aspect ratio (EAR)
    ear = (A + B) / (2.0 * C)
    return ear


def get_pupil(frame, eyeHull):
    # Crop the eye region, then threshold it to isolate the dark pupil
    (x, y, w, h) = cv2.boundingRect(eyeHull)
    roi = frame[y:y + h, x:x + w]
    gray = cv2.cvtColor(roi, cv2.COLOR_BGR2GRAY)
    gray = cv2.equalizeHist(gray)
    gray = cv2.GaussianBlur(gray, (5, 5), 0)
    thresh = cv2.threshold(gray, 40, 255, cv2.THRESH_BINARY_INV)[1]
    cnts = cv2.findContours(thresh, cv2.RETR_TREE, cv2.CHAIN_APPROX_SIMPLE)
    cnts = imutils.grab_contours(cnts)
    if len(cnts) > 0:
        # Use the largest contour and take its centroid as the pupil
        cnts = max(cnts, key=cv2.contourArea)
        (_, radius) = cv2.minEnclosingCircle(cnts)
        if radius > 2:
            M = cv2.moments(cnts)
            if M["m00"] > 0:
                center = (int(M["m10"] / M["m00"]), int(M["m01"] / M["m00"]))
                return int(x + center[0]), int(y + center[1])
    # No usable pupil found
    return None


# Horizontal position of the pupil as a ratio of the eye width
def horizontal_ratio(eyePupil, eye):
    return (eyePupil[0] - eye[0][0]) / (eye[3][0] - eye[0][0])


# Vertical position of the pupil as a ratio of the eye height
def vertical_ratio(eyePupil, eye):
    top = (eye[1][1] + eye[2][1]) / 2
    bottom = (eye[4][1] + eye[5][1]) / 2
    # Skip the calculation when the detected pupil falls outside the eye
    if eyePupil[1] < top or eyePupil[1] > bottom:
        return 0.5
    return (eyePupil[1] - top) / (bottom - top)


def main():
    (lStart, lEnd) = face_utils.FACIAL_LANDMARKS_IDXS["left_eye"]
    (rStart, rEnd) = face_utils.FACIAL_LANDMARKS_IDXS["right_eye"]

    # Short aliases for the two exercise state dictionaries
    ex1 = PROPERTIES["exercise_1"]
    ex2 = PROPERTIES["exercise_2"]

    face_aligned = False
    face_aligned_times = 0
    face_aligned_max_times = 20

    # Start the webcam
    print("[INFO] starting webcam...")
    vs = WebcamVideoStream().start()
    time.sleep(2.0)

    first_frame = vs.read()
    face_detector = FaceDetector()
    landmark_detector = LandmarkDetector(68)
    head_pose_estimator = HeadPoseEstimator(first_frame.shape[1], first_frame.shape[0])

    while True:
        frame = vs.read()
        rects = face_detector.detect(frame)
        shapes = landmark_detector.detect(frame, rects)

        for shape in shapes:
            (x, y, w, h) = face_utils.rect_to_bb(rects[0])
            if w < 170:
                cv2.rectangle(frame, (x, y), (x + w, y + h), (0, 255, 0), 2)
                cv2.putText(frame, "Please move closer to the camera :)", (10, 30),
                            cv2.FONT_HERSHEY_SIMPLEX, 0.7, (255, 0, 0), 2)
                break

            leftEye = shape[lStart:lEnd]
            rightEye = shape[rStart:rEnd]
            leftEAR = eye_aspect_ratio(leftEye)
            rightEAR = eye_aspect_ratio(rightEye)
            ear = (leftEAR + rightEAR) / 2.0

            # Exercise 1 is not finished yet
            if not ex1["is_completed"]:
                # Eyes are closed
                if ear < ex1["eye_ar_thresh"] and not ex1["re_check"]:
                    ex1["eye_ar_consec_counter"] += 1
                    # Check whether the eyes have been closed long enough
                    if ex1["eye_ar_consec_counter"] >= ex1["eye_ar_consec_frames"]:
                        ex1["completed_times"] += 1
                        ex1["eye_ar_consec_counter"] = 0
                        ex1["re_check"] = True
                # Eyes are open again
                elif ear > ex1["eye_ar_thresh"] and ex1["re_check"]:
                    ex1["re_check"] = False
                    ex1["eye_ar_consec_counter"] = 0

                # Check whether exercise 1 is complete
                if ex1["completed_times"] == ex1["total_times"]:
                    ex1["is_completed"] = True

            # Exercise 2 is not finished yet
            if ex1["is_completed"] and not ex2["is_completed"]:
                leftEyeHull = cv2.convexHull(leftEye)
                rightEyeHull = cv2.convexHull(rightEye)
                leftPupil = get_pupil(frame, leftEyeHull)
                rightPupil = get_pupil(frame, rightEyeHull)

                # Align the face first
                if not face_aligned and face_aligned_times < face_aligned_max_times:
                    (v_p1, v_p2, h_p1, h_p2) = head_pose_estimator.head_pose_estimate(shape)
                    try:
                        # Vertical face angle
                        m = (v_p2[1] - v_p1[1]) / (v_p2[0] - v_p1[0])
                        ang1 = int(math.degrees(math.atan(m)))
                    except (ZeroDivisionError, ValueError):
                        ang1 = 90
                    try:
                        # Horizontal face angle
                        m = (h_p2[1] - h_p1[1]) / (h_p2[0] - h_p1[0])
                        ang2 = int(math.degrees(math.atan(-1 / m)))
                    except (ZeroDivisionError, ValueError):
                        ang2 = 90

                    if -80 <= ang1 <= -20 and -50 <= ang2 <= 50:
                        face_aligned_times += 1
                        cv2.putText(frame, "face aligned!", (550, 10),
                                    cv2.FONT_HERSHEY_SIMPLEX, 0.3, (255, 255, 0), 2)
                    else:
                        face_aligned_times = 0
                else:
                    face_aligned = True

                # Both pupils were found
                if leftPupil is not None and rightPupil is not None and face_aligned:
                    left_hor = horizontal_ratio(leftPupil, leftEye)
                    right_hor = horizontal_ratio(rightPupil, rightEye)
                    left_ver = vertical_ratio(leftPupil, leftEye)
                    right_ver = vertical_ratio(rightPupil, rightEye)
                    ratio_hor = (left_hor + right_hor) / 2
                    ratio_ver = (left_ver + right_ver) / 2

                    # Draw the prompt, highlighting the next direction in red
                    if ex2["look_direction"] is None:
                        cv2.putText(frame, "Move your eyeball from ", (10, 30),
                                    cv2.FONT_HERSHEY_SIMPLEX, 0.5, (0, 255, 255), 2)
                        cv2.putText(frame, "Top ", (204, 30),
                                    cv2.FONT_HERSHEY_SIMPLEX, 0.5, (0, 0, 255), 2)
                        cv2.putText(frame, "=> Left => Bottom => Right", (239, 30),
                                    cv2.FONT_HERSHEY_SIMPLEX, 0.5, (0, 255, 255), 2)
                    elif ex2["look_direction"] == "Top":
                        cv2.putText(frame, "Move your eyeball from Top ", (10, 30),
                                    cv2.FONT_HERSHEY_SIMPLEX, 0.5, (0, 255, 255), 2)
                        cv2.putText(frame, "=> Left ", (239, 30),
                                    cv2.FONT_HERSHEY_SIMPLEX, 0.5, (0, 0, 255), 2)
                        cv2.putText(frame, "=> Bottom => Right", (310, 30),
                                    cv2.FONT_HERSHEY_SIMPLEX, 0.5, (0, 255, 255), 2)
                    elif ex2["look_direction"] == "Left":
                        cv2.putText(frame, "Move your eyeball from Top => Left ", (10, 30),
                                    cv2.FONT_HERSHEY_SIMPLEX, 0.5, (0, 255, 255), 2)
                        cv2.putText(frame, "=> Bottom ", (310, 30),
                                    cv2.FONT_HERSHEY_SIMPLEX, 0.5, (0, 0, 255), 2)
                        cv2.putText(frame, "=> Right", (407, 30),
                                    cv2.FONT_HERSHEY_SIMPLEX, 0.5, (0, 255, 255), 2)
                    elif ex2["look_direction"] == "Bottom":
                        cv2.putText(frame, "Move your eyeball from Top => Left => Bottom ", (10, 30),
                                    cv2.FONT_HERSHEY_SIMPLEX, 0.5, (0, 255, 255), 2)
                        cv2.putText(frame, "=> Right", (407, 30),
                                    cv2.FONT_HERSHEY_SIMPLEX, 0.5, (0, 0, 255), 2)
                    elif ex2["look_direction"] == "Right":
                        cv2.putText(frame, "Completed", (10, 30),
                                    cv2.FONT_HERSHEY_SIMPLEX, 0.5, (0, 255, 255), 2)

                    # Advance through Top => Left => Bottom => Right
                    if ratio_ver < 0.1 and (0.4 < ratio_hor < 0.6) and ex2["look_direction"] is None:
                        if ex2["look_count"] < ex2["look_max_count"]:
                            ex2["look_count"] += 1
                        else:
                            ex2["look_count"] = 0
                            ex2["look_direction"] = "Top"
                    elif ratio_hor > 0.7 and ex2["look_direction"] == "Top":
                        if ex2["look_count"] < ex2["look_max_count"]:
                            ex2["look_count"] += 1
                        else:
                            ex2["look_count"] = 0
                            ex2["look_direction"] = "Left"
                    elif ratio_ver > 0.15 and (0.4 < ratio_hor < 0.6) and ex2["look_direction"] == "Left":
                        if ex2["look_count"] < ex2["look_max_count"]:
                            ex2["look_count"] += 1
                        else:
                            ex2["look_count"] = 0
                            ex2["look_direction"] = "Bottom"
                    elif ratio_hor < 0.2 and ex2["look_direction"] == "Bottom":
                        if ex2["look_count"] < ex2["look_max_count"]:
                            ex2["look_count"] += 1
                        else:
                            ex2["look_count"] = 0
                            ex2["look_direction"] = "Done"
                            ex2["is_completed"] = True

            # Status line for the current stage
            if not ex1["is_completed"]:
                cv2.putText(frame, f'Blink for 5 secs: ({ex1["completed_times"]}/{ex1["total_times"]})',
                            (10, 30), cv2.FONT_HERSHEY_SIMPLEX, 0.7, (0, 255, 0), 2)
            elif ex1["is_completed"] and not ex2["is_completed"] and not face_aligned:
                cv2.putText(frame, "Exercise 1 Completed! Please align your face", (10, 30),
                            cv2.FONT_HERSHEY_SIMPLEX, 0.7, (0, 255, 255), 2)
            elif ex1["is_completed"] and ex2["is_completed"]:
                cv2.putText(frame, "Exercise 2 Completed!, 'r' for re-run ; 'q' for quit...", (10, 30),
                            cv2.FONT_HERSHEY_SIMPLEX, 0.7, (0, 255, 255), 2)

        key = cv2.waitKey(1) & 0xFF
        if key == ord("r"):
            face_aligned = False
            face_aligned_times = 0
            # Exercise 1 variables
            ex1["is_completed"] = False
            ex1["re_check"] = False
            ex1["completed_times"] = 0
            ex1["eye_ar_consec_counter"] = 0
            # Exercise 2 variables
            ex2["is_completed"] = False
            ex2["look_direction"] = None
            ex2["look_count"] = 0
        elif key == ord("q"):
            break

        cv2.imshow("Frame", frame)

    cv2.destroyAllWindows()
    vs.stop()


if __name__ == '__main__':
    main()
```
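To get a feel for the numbers behind `eye_aspect_ratio` and the `eye_ar_thresh` value of 0.3, here is a small worked sketch. It is a simplified stand-in rather than the project code: it uses `math.dist` (Python 3.8+) instead of SciPy so it runs without extra packages, and the landmark coordinates are made up for illustration.

```python
import math


def eye_aspect_ratio(eye):
    """Compute the eye aspect ratio (EAR) from six (x, y) eye landmarks."""
    # Two vertical distances between the upper and lower eyelid points
    a = math.dist(eye[1], eye[5])
    b = math.dist(eye[2], eye[4])
    # One horizontal distance between the two eye corners
    c = math.dist(eye[0], eye[3])
    return (a + b) / (2.0 * c)


# Hypothetical landmark coordinates, ordered like the six points of one eye:
# corner, two upper-lid points, corner, two lower-lid points
open_eye = [(0, 3), (2, 2), (4, 2), (6, 3), (4, 4), (2, 4)]
closed_eye = [(0, 3), (2, 2.5), (4, 2.5), (6, 3), (4, 3.5), (2, 3.5)]

EYE_AR_THRESH = 0.3  # same value as "eye_ar_thresh" in PROPERTIES

open_ear = eye_aspect_ratio(open_eye)
closed_ear = eye_aspect_ratio(closed_eye)

print(f"open eye EAR:   {open_ear:.3f}, below threshold: {open_ear < EYE_AR_THRESH}")
print(f"closed eye EAR: {closed_ear:.3f}, below threshold: {closed_ear < EYE_AR_THRESH}")
```

As the eyelids come together the two vertical distances shrink while the horizontal distance stays roughly constant, so the ratio drops below the threshold.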

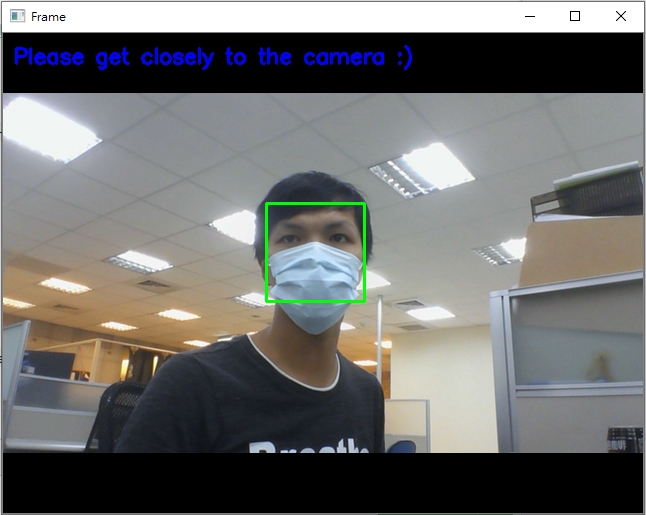

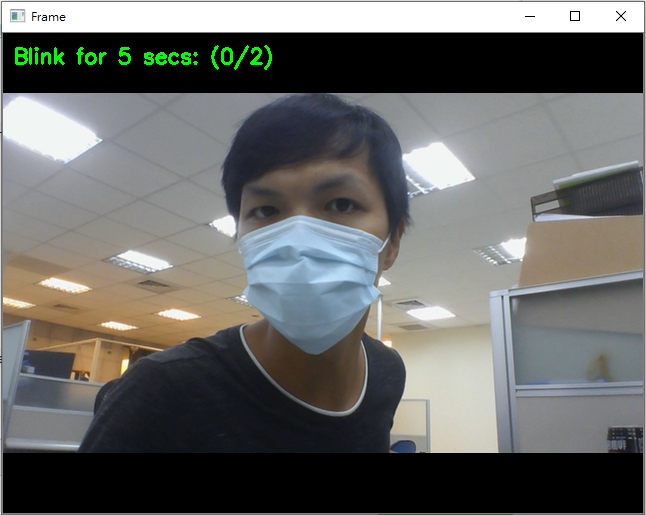

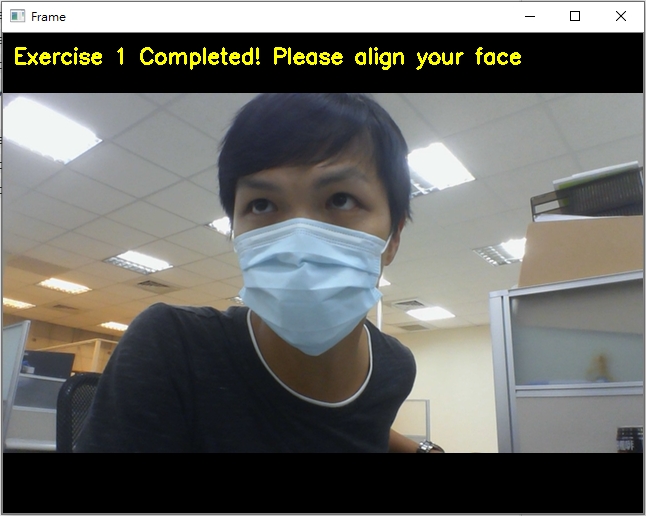

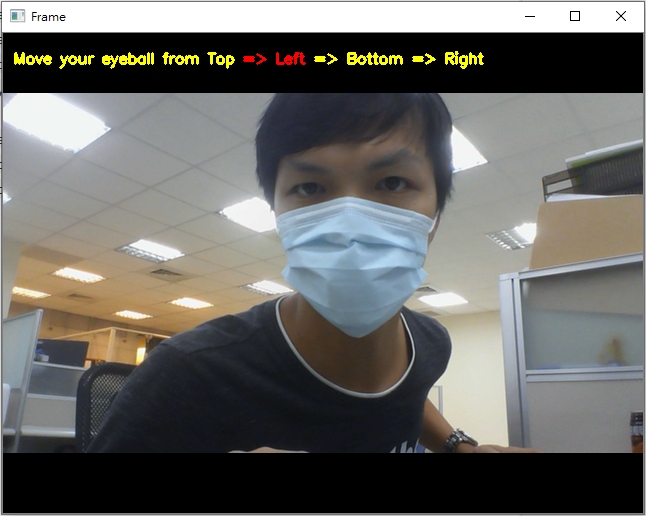

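An example of the interaction:

- Detection only starts once the face is close enough to the camera
- The blink exercise (the on-screen prompt reads "Blink for 5 secs")
- The program asks for the face to be directly facing the camera
- The eye movement exercise (Top => Left => Bottom => Right)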
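The Top => Left => Bottom => Right sequence in `main.py` is written as a chain of `elif` branches. As a rough sketch of the same idea in a more compact form, the logic can be viewed as a small state machine that advances one direction at a time. The class `GazeSequence` and the function `matches` below are hypothetical names; the thresholds are copied from `main.py`, and everything else is an assumption made for the illustration.

```python
SEQUENCE = ["Top", "Left", "Bottom", "Right"]


def matches(direction, ratio_hor, ratio_ver):
    """Check whether the pupil ratios correspond to the given direction."""
    centered = 0.4 < ratio_hor < 0.6
    if direction == "Top":
        return ratio_ver < 0.1 and centered
    if direction == "Left":
        return ratio_hor > 0.7
    if direction == "Bottom":
        return ratio_ver > 0.15 and centered
    if direction == "Right":
        return ratio_hor < 0.2
    return False


class GazeSequence:
    """Track progress through SEQUENCE, one direction at a time."""

    def __init__(self, hold_frames=25):
        self.hold_frames = hold_frames  # plays the role of "look_max_count"
        self.index = 0                  # position of the next direction
        self.count = 0                  # plays the role of "look_count"

    @property
    def completed(self):
        return self.index >= len(SEQUENCE)

    def update(self, ratio_hor, ratio_ver):
        """Feed the ratios of one frame; advance once enough frames match."""
        if self.completed:
            return
        if matches(SEQUENCE[self.index], ratio_hor, ratio_ver):
            if self.count < self.hold_frames:
                self.count += 1
            else:
                self.count = 0
                self.index += 1


# Usage with a short hold so the example stays small
seq = GazeSequence(hold_frames=2)
for ratios in [(0.5, 0.05), (0.8, 0.5), (0.5, 0.5), (0.1, 0.5)]:
    for _ in range(3):  # hold_frames matching frames plus one to advance
        seq.update(*ratios)
print(seq.completed)
```

Like the original branches, this counts matching frames without requiring them to be consecutive; resetting `count` on a non-matching frame would make the check stricter.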

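There is still plenty that could be added to this application:

- Generate "objects" at varying distances so the eye muscles alternate between relaxing and tightening

- Add timed reminders, alarms, and similar features

- Package the program as an app that runs on phones (for example with Kivy, Flutter, or Google ML Kit)

Try finishing these yourself, or build an application of your own!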
